I’m not a robot: a review of Futureproof

Making meaning in the AI age

With the recent hoopla around AI, I decided to explore the topic holistically by reading Futureproof by Kevin Roose. It was a great read.

TL;DR: My main takeaway is that by being more human, we become more futureproof. Don’t compete with machine intelligence on the machine’s turf; be irrefutably human. This can manifest itself in a variety of ways, which I’ll cover. But first, I’ll discuss the most thought-provoking ideas in the book, though this is not meant to be an exhaustive review.

but first, ~~coffee~~ the bad news

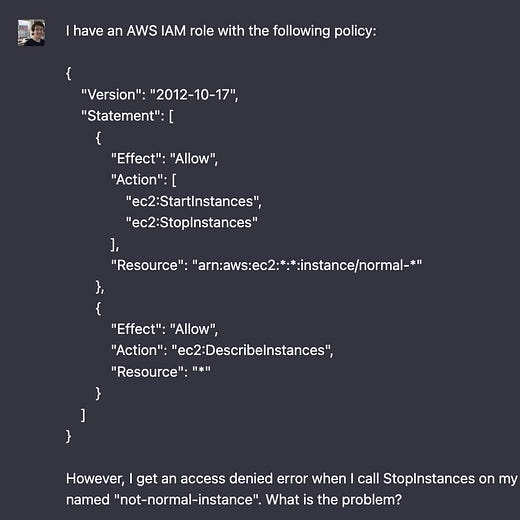

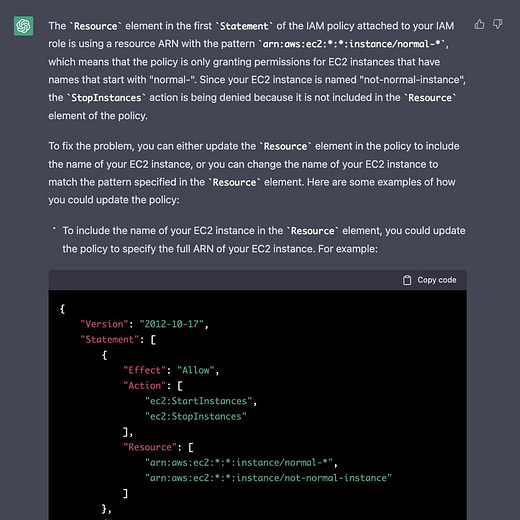

I found Roose’s summary of how AI will displace workers enlightening because it’s not the doom-and-gloom we’re accustomed to hearing. Instead, it’s pragmatic but nevertheless concerning. Roose suggests that widespread adoption of AI won’t cause sudden mass unemployment. Rather, the effects of automation will occur gradually and often go unnoticed. Specifically, the productivity leverage AI affords will gradually result in organizations requiring fewer workers, and in a lower barrier to entry for competence (e.g., tasks that once needed experienced workers can be handled by less experienced ones). Relatedly, new organizations will need fewer workers to compete with incumbents in existing markets. Anecdotally, I’ve thought about this in the context of software development. Why hire a DevOps specialist when ChatGPT can help debug something as arcane as IAM policies?

credentials who?

There’s good news though!

Roose argues that in the future, credentials and titles will be valued less relative to human qualities. This is, of course, the result of the cost of basic cognition trending toward zero.

You can write code without being a developer, you can write copy without being a copywriter, and so on. The use cases for permissionless, credential-less creation abound.

For me personally, AI has made it trivial to dive into unfamiliar domains. For example, I was able to interrogate ChatGPT about linear optimization, a topic I last studied a decade ago. Now, truth be told, it sent me down some rabbit holes with its sometimes faulty information, but it let me get started and iterate so quickly that it didn’t matter.

on being “more human”

> Put yourself in positions where you can express your humanity, and where there can be no mistaking you for a robot.

Ok, now for the good stuff.

The book covers a variety of qualities that make human labor inherently or comparatively more valuable than machine cognitive labor. The categories I formed in my head break down roughly into three: social skills, skepticism, and creativity.

whatcha talkin bout

For the time being, AI and machine intelligence cannot interact or relate on a human level, so social skills are a great differentiator. Examples cited in the book include service industry jobs like bartending, hair care, and mental health care. The machines do not yet possess the empathy and other relational skills that make human interaction (generally) pleasant.

Knowledge workers also stand to benefit from sharpening their soft skills. An engineer who can communicate effectively with non-technical folks is far more valuable than a savant who cranks out lines of code, because AI can generate code faster, more cheaply, and reasonably accurately. Personally, I found the callout to code-switching especially enlightening: (most) humans are adept at reading the social cues that make communicating across different and ever-changing groups substantially easier. That said, AI can code-switch if prompted effectively…

Relatedly, people who can elicit emotional responses are more futureproof:

> A good general rule is that jobs that make people feel things are much safer than jobs that simply involve making or doing things.

As a result, I believe that entertainers who create authentic or world-class experiences will be just fine. I suspect a psychological bias will also be at play when people evaluate entertainment; as noted in the book, hordes of people today buy artisanal goods, like coffee or the things people sell on Etsy, even though mass-market alternatives exist.

It’s worth noting that AI systems designed to cooperate with humans are an active area of research, though their long-term economic and social effects are not yet understood.

don’t believe everything you read online

> Instead, I prefer to talk about “digital discernment,” which reflects the fact that learning to navigate our way through a hazy, muddled online information ecosystem is a continuous, lifelong process that changes as technology shifts, and as media manipulators adapt to new tools and platforms.

Roose argues that skepticism, in the form of “digital discernment,” will be a critical skill in the age of machine intelligence. I think this argument closely tracks the inevitable consequences of the technology we’re seeing today. As tools like ChatGPT let people produce content cheaply and quickly, there will be a deluge of it. It follows, then, that people will be well served by the ability to question and integrate online content effectively, because much of that content will be misleading or false.

I realized that these skills are not particularly new, though they are more critical than ever. I recall using the internet as a kid in the pre-Web 2.0 era. I quickly learned that people post patently false information (gasp), misrepresent their identities 😱, and engage in other deceptive tactics. I also remember that, with the advent of Wikipedia, teachers emphasized that thorough research meant corroborating claims across different sources and points of view. In retrospect, I’m grateful to have grown up as a digital native who also lived through the transition to the digital economy, because it let me develop digital discernment organically. I acknowledge, however, that today’s environment does a poor job of engendering these critical thinking skills, and that various factors have conspired to make misinformation worse, but that’s a topic for a separate post.

Even when AI-generated content isn’t published to the public, digital discernment will be critical for anyone trying to leverage machine intelligence, because LLMs like ChatGPT are prone to hallucinations (aka straight-up lies that seem plausible). I also think digital discernment is critical to getting the most out of AI. Interrogating ChatGPT for knowledge, Socratic-method style, yields amazing results, except when it stubbornly refuses to admit it’s wrong…

reframing creativity

An underlying theme of Futureproof is that people are still needed to bring ideas to life. Rule 1 in the book is “Be Surprising, Social, and Scarce,” and Rule 4 is “Leave Handprints.” These rules, along with the others, center on not competing on the machine’s terms; for example, doing highly repetitive, standardized work is ripe for automation-based disruption. My interpretation is that we should not define creativity in machine terms; it remains well within the domain of humanity, though it now manifests differently.

Sure, a tool like Midjourney can produce beautiful art, but it still requires a human with a creative impulse to “autocomplete” their inspiration. I think this calls for reframing creativity as not strictly bound to creative execution, like drawing, writing prose, or writing code. Creativity under this regime requires a human in the loop to inspire, curate, and refine AI’s creative execution. A human in the loop is also currently a legal prerequisite because AI cannot own a copyright, though this will of course be litigated in the years to come.

conclusion

I thoroughly enjoyed Futureproof, and it’s helped refine my thinking on the implications of AI. I deliberately did not cover everything in the book. One part I may share in a future post is creating my own personalized “futureproof plan.” That part, along with the book’s general tone, instilled in me a pragmatic optimism that’s a breath of fresh air in a world where writing on technology tends to be either alarmist or negligently naive. I encourage others to read it and start making meaning in the exciting times ahead.