Stop Interviewing for the Last War

Why Employers Should Test AI Fluency, Not Detect Cheating

A friend recently overhauled his company’s entire hiring process. Not because of budget cuts or growth pivots, but because hundreds of fake applicants flooded the pipeline, some even using AI to impersonate real people during calls.

The problem wasn’t that candidates used AI. It was that some hid it when asked directly.

There’s a clear ethical line here. Using AI to augment your work? Smart. Hiding AI use when asked point-blank? Dishonest. Not using AI when everyone else does? Career-limiting.

While companies play whack-a-mole with “AI cheaters,” their competitors are hiring AI-augmented superstars who are upfront about their tools. The real question: How well do you use AI, and are you honest about it?

The New Baseline

The market has moved. 88% of organizations now use AI regularly, up from 78% a year ago. This isn’t just tech companies. Marketing saw the biggest adoption increases, and 62% of organizations are experimenting with AI agents.

I’ve watched this play out in teams I work with. Strong engineers with solid fundamentals leverage AI to become even more effective. Less experienced developers copy-paste AI-generated code without understanding the implications. The difference? The first group has the judgment to know when to trust AI and when to trust themselves.

This is the amplification effect: strong fundamentals plus AI equals 10x improvement. Weak fundamentals plus AI equals scaling bad judgment. Engineers with liberal arts backgrounds often excel here because they’ve been trained to think critically about context, a meta-skill that matters more than ever.

You’re screening for effective AI users who understand the tool’s role, not just anyone who’s touched ChatGPT. Bonus: people who hide AI use probably lack the judgment you need anyway.

What to Actually Test

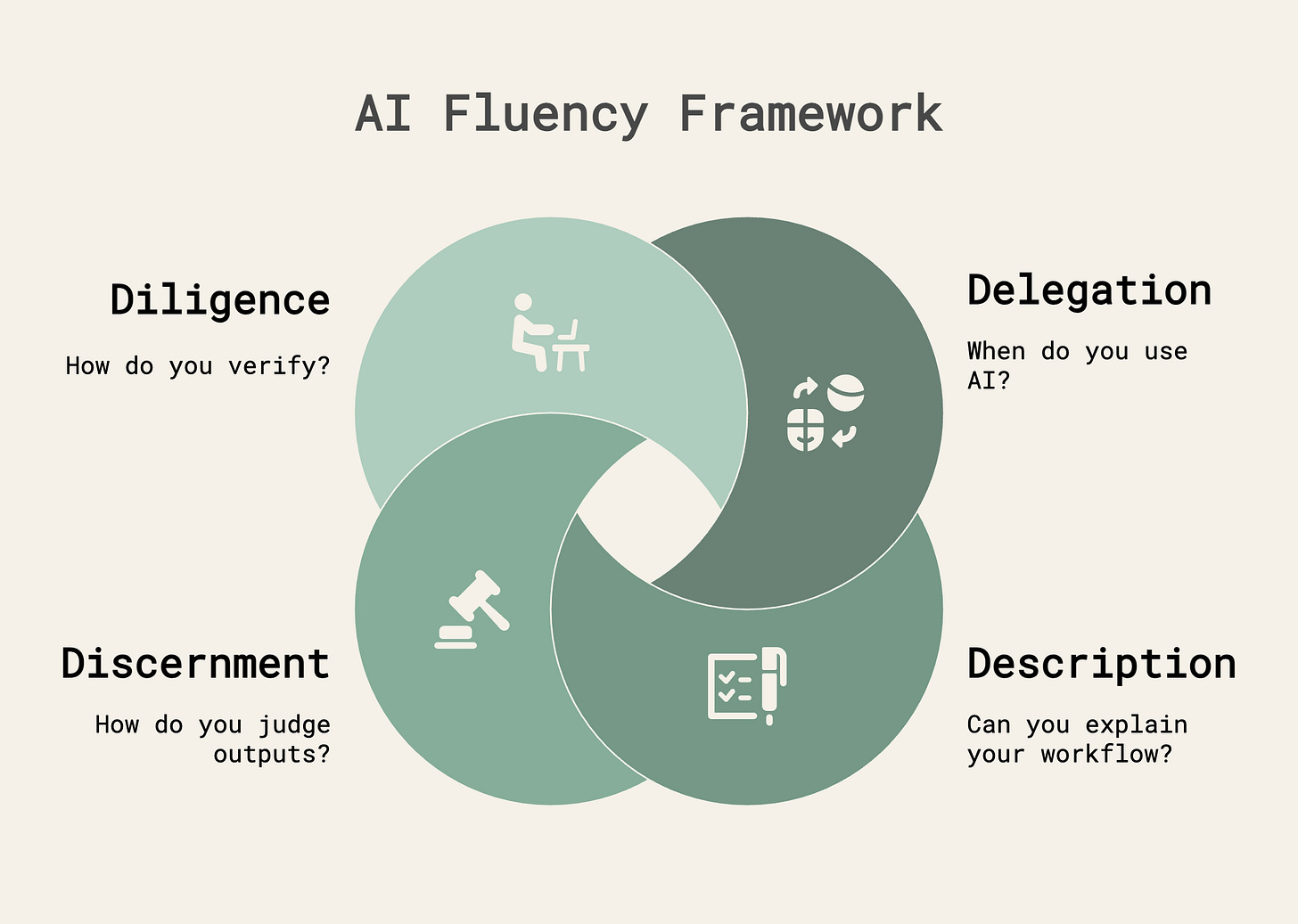

Instead of asking “did you use AI?”, test for the four dimensions that matter. Anthropic’s AI Fluency framework identifies these as Delegation, Description, Discernment, and Diligence.

Delegation: “Tell me about a time AI failed you”

This tests their mental model of AI’s limitations. Watch out for “AI can do everything” (naïve) or “AI is useless” (stubborn). Look for candidates who know when to automate, when to augment, and when to stay hands-on. “I use Claude for planning, Cursor for implementation, but always review architecture decisions myself” is exactly what you want to hear.

Description: “Walk me through your workflow for <relevant task>”

You’re testing orchestration and communication clarity. Good candidates use multiple tools strategically: “I start with Perplexity for research, move to Claude for synthesis, then validate key claims manually.” Watch out for people using one tool for everything or being unable to articulate why they chose specific tools.

Discernment: “Show me something you shipped. What did AI contribute versus you?”

This tests critical evaluation. Look for candidates who can explain what they verified and where they added strategic value: “AI drafted the code, but I redesigned the data model because it missed the scaling implications.” A related signal is what I call the $100/month test: ask which AI tools they pay for personally. It shows real commitment beyond casual use.

Diligence: “How do you verify AI outputs?”

Ask them to walk through checking an AI-generated technical spec. Strong candidates demonstrate source triangulation (“I check the AI’s claims against official docs”), metacognition (“I know I don’t know X, so I verify those sections more carefully”), and ethical consideration (“I always check if the solution creates accessibility issues”).

Put It Into Practice

For technical roles, give them pre-written AI code with subtle bugs. Tell them: “You have full AI access. Fix this and explain what you’d deploy to production.” This tests whether they can debug, whether they blindly trust AI fixes, and whether they understand the underlying system.
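To make this concrete, here is a minimal, hypothetical take-home snippet in Python. The function names and the choice of bug are illustrative assumptions, not a prescribed test. The planted bug is a classic: a mutable default argument that silently leaks state between calls. It looks fine at a glance, which is exactly what makes it useful for separating candidates who accept “looks good” from those who understand the language.

```python
# Hypothetical interview exercise: an "AI-generated" helper with a planted bug.
# The bug: the default list is created once, when the function is defined,
# and is then shared by every call that omits `tags`.
def add_tag_buggy(tag, tags=[]):
    tags.append(tag)
    return tags


# What a strong candidate should ship: create a fresh list on each call
# unless the caller passes one in explicitly.
def add_tag(tag, tags=None):
    if tags is None:
        tags = []
    tags.append(tag)
    return tags


if __name__ == "__main__":
    print(add_tag_buggy("urgent"))   # ['urgent']
    print(add_tag_buggy("billing"))  # ['urgent', 'billing']  <- leaked state
    print(add_tag("urgent"))         # ['urgent']
    print(add_tag("billing"))        # ['billing']
```

The follow-up conversation matters more than the fix itself: can the candidate explain *why* it happens (Python evaluates default arguments once, at definition time), or only that a tool flagged it?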

For non-technical roles, provide AI-drafted content that’s 80% good and ask them to improve it for your target audience. Can they identify what’s generic versus strategic? Do they add business context AI missed?

Universal questions that work across roles:

“What’s changed in your AI workflow in the last year?” (The field moves weekly; they should move fast too)

“Name an AI tool you tried and abandoned. Why?” (Shows discernment, not just adoption)

“How would you help a skeptical colleague adopt AI?” (Tests if they can be an AI champion)

The honesty test is simple: ask what AI tools they used to prepare for the interview. You don’t care if they used AI. You care if they’re honest about it.

Why This Matters Now

Only 33% of organizations have scaled AI beyond pilots, despite 88% using it. The most in-demand roles are software engineers and data engineers, precisely where AI amplification matters most.

Organizations with AI champions see 80% success rates versus 37% without. High performers in McKinsey’s research (those attributing 5% or more of EBIT to AI) are 3x more likely to have redesigned workflows around AI, not just adopted it.

Here’s the moat: hire one AI-fluent person, and they can transform 10–20 colleagues until your whole department levels up.

The Takeaway

Ask how well candidates use AI and whether they’re honest about it. The days of hiding AI use are over.

The next generation of top performers won’t hide their AI fluency. They’ll demonstrate it. Your job is building interview processes that surface this competitive advantage.