A bird's eye view of the competitive landscape in AI part 2

Do all paths lead to OpenAI?

In the previous article, we began our dive into the AI competitive landscape, and covered foundries, chips and cloud providers. In this part, I continue that discussion with foundation models. I explore the competitive factors that impact the development of these models. I’ll close with what I believe are the consequences for builders.

Reading the first part is not necessary, but provides a good foundation (I couldn’t resist the pun-portunity).

foundation models

Quick recap: A foundation model is a type of AI model that has been pre-trained on vast amounts of data and can be used as a starting point for building more specialized AI models. It serves as a foundation for more complex and specific models.

Think of it as a starting point that has already learned some fundamental concepts, making it easier and faster to create more advanced AI models that can solve specific problems. By building on top of a foundation model, developers can create more powerful AI applications that are better at understanding and interpreting complex data.

leading models

On the language/text front there are several leading foundation models (LLMs):

GPT-3 by OpenAI

GPT-3 is an ancestor of the model that ChatGPT uses.

GPT-4 is slated for a public 2023 release, and is rumored to power Bing AI.

LaMDA (or a variation of it) by Google is planned for March 2023.

Claude by Anthropic, which was founded by former OpenAI researchers, is in limited release.

On the image generation front the leading models are:

MidJourney (though it does not have an API yet)

Notably, it’s also possible to train and run your own foundation models:

Stable Diffusion is open source and can be run on inexpensive consumer hardware.

MosaicML helps companies train large models of their own.

A variety of models are freely available on HuggingFace (though many are not foundation models, as they are more narrow in scope).

different strokes

Those lists are not exhaustive, but it’s clear there are plenty of options for foundation models.

Foundation models, like all engineering endeavors, must make tradeoffs. As a result, organizations will inevitably compete across several axes that address different market demands including:

model strength: to what extent can models satisfy a request? Can the model help me get done what I need? Can it do so with minimal preparation and preamble?

inference costs: can I use the model economically for a certain use-case? For example, is $1 for a complex SQL query worthwhile for my business?

privacy: can I use this model with my private data and/or my infrastructure? Will I have to use a public API or public cloud (and by extension place trust in a third party)?

performance/UX: can this model be integrated into my existing workflows with responsive-feeling latency? Large models tend to be slower at inference as well as cost more, so bigger isn’t always better.

portability: can I use the model at the edge (e.g., smartphones, IoT devices)? This is related to privacy and performance/UX, but distinct. A small self-hosted model may be less powerful but can run on a consumer’s phone, vastly expanding the number of use-cases. Conversely, some model offerings are designed as on-premises solutions for enterprises.

safety + alignment: are the models built with alignment in mind? If so, is the alignment methodology consistent with my needs? Though we’re in the early innings of wider AI adoption, these issues have already stoked skepticism, and they will not be solved easily.
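
To make the inference-cost question concrete, here is a back-of-the-envelope sketch. Every number in it is hypothetical: the per-token price, the token counts, and the value I assign to a query are placeholders I made up for illustration, not quotes from any provider.

```python
def cost_per_query(prompt_tokens, completion_tokens, price_per_1k_tokens):
    """Cost of one request, assuming a flat (hypothetical) price per 1,000 tokens."""
    total_tokens = prompt_tokens + completion_tokens
    return total_tokens / 1000 * price_per_1k_tokens


def monthly_margin(queries_per_month, value_per_query, query_cost):
    """Value created minus inference spend over a month; negative means a loss."""
    return queries_per_month * (value_per_query - query_cost)


# Hypothetical workload: 1,500 prompt tokens and 500 completion tokens per query,
# priced at a made-up $0.02 per 1,000 tokens.
query_cost = cost_per_query(1500, 500, 0.02)

# If each query is worth roughly $0.10 to the business, 10,000 queries a month
# leave a positive margin; at $1 per query the same workload would lose money.
print(monthly_margin(10_000, 0.10, query_cost))
print(monthly_margin(10_000, 0.10, 1.00))
```

The point is less the numbers than the shape of the decision: a model is only economical for a use-case when the value of a request exceeds what it costs to serve.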

human capital

Unsurprisingly, the proliferation of different foundation models is a function of open research, and the free movement of human capital.

Some noteworthy events:

Researchers at OpenAI left to found Anthropic, which takes a different approach to safety and alignment. Anthropic has since raised $300 million from Google.

Google published the initial paper on transformers, the architecture at the core of the leading LLMs. Many of the original authors of that paper, “Attention Is All You Need”, have since left Google, with some starting AI companies of their own.

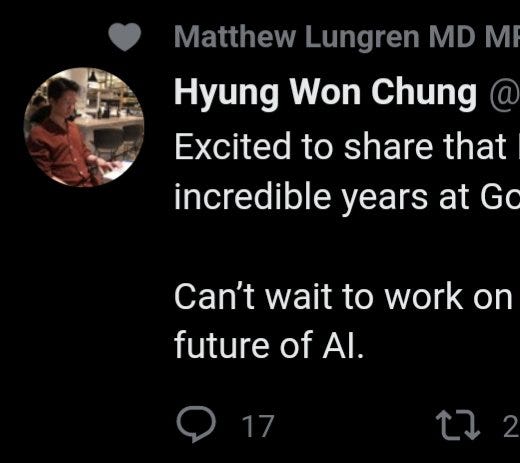

Most recently, OpenAI has hired other Google AI researchers.

The diffusion of human capital is reminiscent of the origins of Silicon Valley, whereby:

Shockley Semiconductor led to the formation of Fairchild Semiconductor

Fairchild led to Intel & AMD

Nvidia’s founders partially came out of AMD

This diffusion is healthy and accelerates competition and innovation.

the technology

Innovation in AI is not limited to the leading firms I previously discussed.

AI research is accelerating:

There’s a cacophony of new developments that have democratized access to AI. I’ll list a handful.

The cost of training your own Stable Diffusion model started at $600k and has since dropped to $125k, even as Stable Diffusion has become more powerful.

Researchers have decentralized the training of an LLM, as in the case of BLOOM.

DeepMind’s Chinchilla indicates that LLMs can be drastically improved by training more efficiently and scaling training data as opposed to model size. Admittedly, this model is still non-trivial to train (it consists of 70 billion parameters), but its findings are promising: the model outperformed models several times larger.

Other initiatives that optimize efficiency are proliferating, including nanoGPT, which replicates the performance of GPT-2 on a fraction of the compute.
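
To give a feel for the Chinchilla point about scaling data rather than model size, here is a rough sketch. It leans on two rules of thumb that are my assumptions rather than anything stated above: roughly 20 training tokens per parameter (Chinchilla's 70 billion parameters were trained on about 1.4 trillion tokens), and the common approximation that training compute is about 6 × parameters × tokens FLOPs. Treat the output as an order-of-magnitude estimate, nothing more.

```python
# Rule-of-thumb ratio (assumption): about 20 training tokens per model parameter.
TOKENS_PER_PARAM = 20


def compute_optimal_tokens(n_params):
    """Rough number of training tokens suggested for a model of n_params parameters."""
    return TOKENS_PER_PARAM * n_params


def approx_training_flops(n_params, n_tokens):
    """Common approximation (assumption): about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens


chinchilla_params = 70_000_000_000          # 70 billion parameters
tokens = compute_optimal_tokens(chinchilla_params)
flops = approx_training_flops(chinchilla_params, tokens)

print(f"tokens: {tokens:.2e}")   # about 1.4 trillion tokens
print(f"FLOPs:  {flops:.2e}")    # on the order of 10^23 to 10^24
```

Under these assumptions, a fixed compute budget is better spent on a smaller model with more data than on a much larger model trained on the same data.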

Furthermore, there’s active research in the following areas, which will lower the barrier to entry in training sophisticated models:

Model compression: a set of techniques that reduce the size of a neural network by removing unnecessary or redundant parameters. These techniques include pruning, where weights or connections with low importance are removed, and quantization, where the precision of numerical values is reduced. A smaller model makes inference, and in some cases training, faster and more affordable.

Knowledge distillation: a process in which a large, complex model is used to train a smaller, simpler one. The smaller model learns to mimic the behavior of the larger model, allowing it to achieve similar performance while using fewer resources.

Transfer learning: using pre-trained models as a starting point for a new model. By leveraging the pre-trained knowledge of a larger model, the smaller model can achieve good performance with less data and training time.
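
For the curious, here is a minimal sketch of the first two ideas in plain Python. It is a toy illustration under my own assumptions, not how any particular library implements them: pruning is done by weight magnitude, quantization is a simple symmetric 8-bit scheme, and the distillation loss is the cross-entropy between the student's predictions and the teacher's temperature-softened predictions. All function names are hypothetical.

```python
import math


def magnitude_prune(weights, sparsity):
    """Pruning: zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # how many weights to remove
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Quantization: map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Distillation: cross-entropy of the student against the teacher's soft targets."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))


weights = [0.5, -0.01, 0.2, 0.03]
print(magnitude_prune(weights, 0.5))      # the two smallest weights become 0.0
quantized, scale = quantize_int8(weights)
print(quantized, dequantize(quantized, scale))

teacher = [2.0, 1.0, 0.1]
print(distillation_loss([0.1, 1.0, 2.0], teacher))  # student disagrees: higher loss
print(distillation_loss(teacher, teacher))          # student matches: lowest possible loss
```

In practice the student would be trained by gradient descent to minimize a loss like this one, often mixed with the ordinary loss on the true labels; the sketch only shows the quantity being minimized.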

Overall, a bunch of innovation is happening outside of the leading firms described in the previous section, and the pace of innovation is accelerating.

so what?

I’ve presented another swath of the AI landscape, and now I’ll briefly describe some of the implications for builders, and the broader software ecosystem.

In the medium term, I think there will be monopolistic competition in state-of-the-art (SOTA) models. There will be differentiation in the capabilities of said models, and there won’t be direct price competition. Builders will have their pick of the litter for whatever use-case they require.

Long-term, I largely agree with Elad Gil’s conclusion that the market will become an oligopoly similar to cloud computing, though I think niche open source models will play an important role too.

The pace of research and innovation will provide fertile ground for non-SOTA yet useful models, which will provide immense value to the software ecosystem writ large. Not every use-case requires the latest and greatest.

As a corollary to the previous point, there will be useful models that can run at the edge. This will grow the total addressable market (TAM) for AI applications.

This is already happening: Stable Diffusion can run natively on Apple Silicon Macs.

Putting these together leads to a rather boring meta-conclusion: building AI applications will largely be similar to building traditional applications, in that a defensible product is unlikely to depend on the technology itself. Defensibility in light of the conclusions above is something I’ll likely cover in future posts.

Let me know what you think of these conclusions in the comments below.