AI's Going Up, Prices Are Going... Wait, What?

In my last post, I discussed how the current AI wave mirrors historical tech trends, with over-investment in infrastructure preceding substantial profits. Today, let's explore another exciting aspect of this AI revolution: the dramatic price drops. These plummeting costs aren't just a footnote—they're a hallmark of truly transformative, paradigm-shifting technology.

a walk down memory lane

To put this in perspective, let's revisit a classic example: computer storage. Remember when digital memories came with a hefty price tag? I certainly do.

Back in 2005, I shelled out $100 for a 1 gigabyte flash drive—and felt like I was living in the future. Fast forward to 2024, and that same Benjamin can snag you a micro SD card smaller than your fingernail, packing a whopping 1 terabyte of storage. We're talking a 1000x increase in capacity for the same price, in a fraction of the size, in just two decades. Mind-boggling, right?
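For the numerically inclined, here is a back-of-the-envelope sketch of what that anecdote implies. It assumes a smooth exponential decline between my two data points (real prices moved in fits and starts) and treats 1 TB as 1,000 GB; the function names are mine, purely for illustration.

```python
import math

def halving_time_years(improvement_factor: float, years: float) -> float:
    """Years for price per gigabyte to halve, assuming a steady exponential decline."""
    return years / math.log2(improvement_factor)

def annual_price_decline(improvement_factor: float, years: float) -> float:
    """Fraction by which price per gigabyte falls each year, under the same assumption."""
    return 1 - improvement_factor ** (-1 / years)

# Figures from the anecdote: $100 bought 1 GB in 2005 and 1 TB in 2024.
factor = 1000          # assumption: 1 TB counted as 1000 GB
years = 2024 - 2005    # 19 years

print(f"halving time: {halving_time_years(factor, years):.2f} years")
print(f"annual decline: {annual_price_decline(factor, years):.1%}")
```

Under those assumptions, the price per gigabyte halved about every 1.9 years, or fell roughly 30% a year.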

the price is (rapidly) right

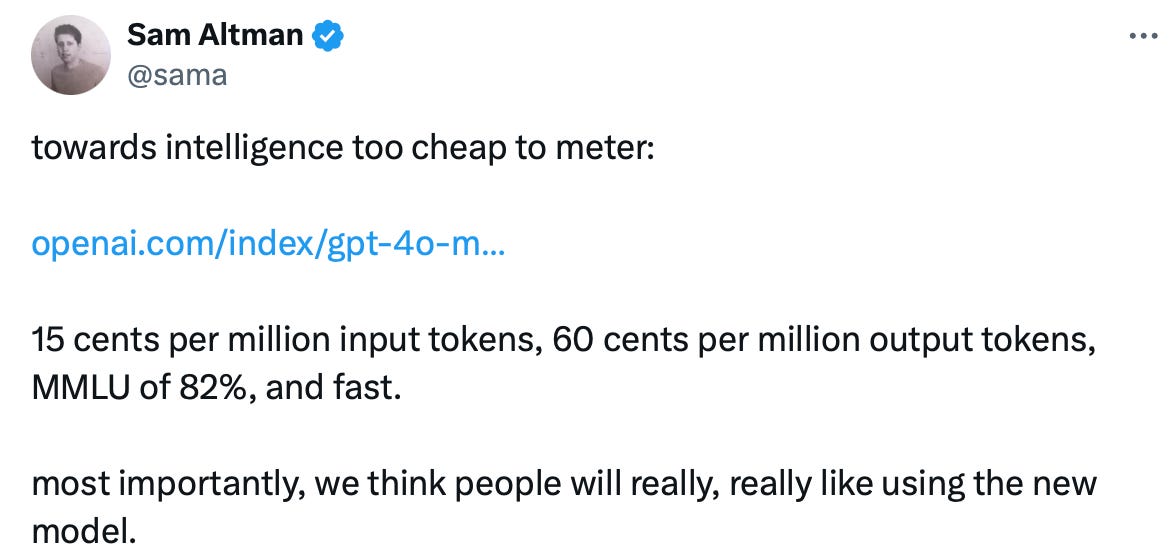

Now, let's turn our attention to LLMs. We're witnessing a similar price plunge, but at a pace that makes even Moore's Law look sluggish:

2022: Each ChatGPT query cost several cents

2023: Costs dropped to less than 1 cent per query

2024: We're approaching models that are almost too cheap to meter
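Per-query figures like these follow from per-token pricing, which is how most providers bill. The sketch below shows the conversion. The query size and both price points are hypothetical numbers picked for illustration, not quotes from any provider's price list.

```python
def cost_per_query(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Dollar cost of one query, given prices in dollars per million tokens."""
    total = input_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000

# Hypothetical query size and prices, for illustration only.
query = dict(input_tokens=500, output_tokens=500)
for label, in_price, out_price in [
    ("pricier model (hypothetical)", 30.00, 60.00),
    ("cheaper model (hypothetical)", 0.15, 0.60),
]:
    dollars = cost_per_query(**query, input_price_per_m=in_price,
                             output_price_per_m=out_price)
    print(f"{label}: {dollars * 100:.4f} cents per query")
```

With these made-up numbers, the same query goes from a few cents to a small fraction of a cent, which is the shape of the drop described above.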

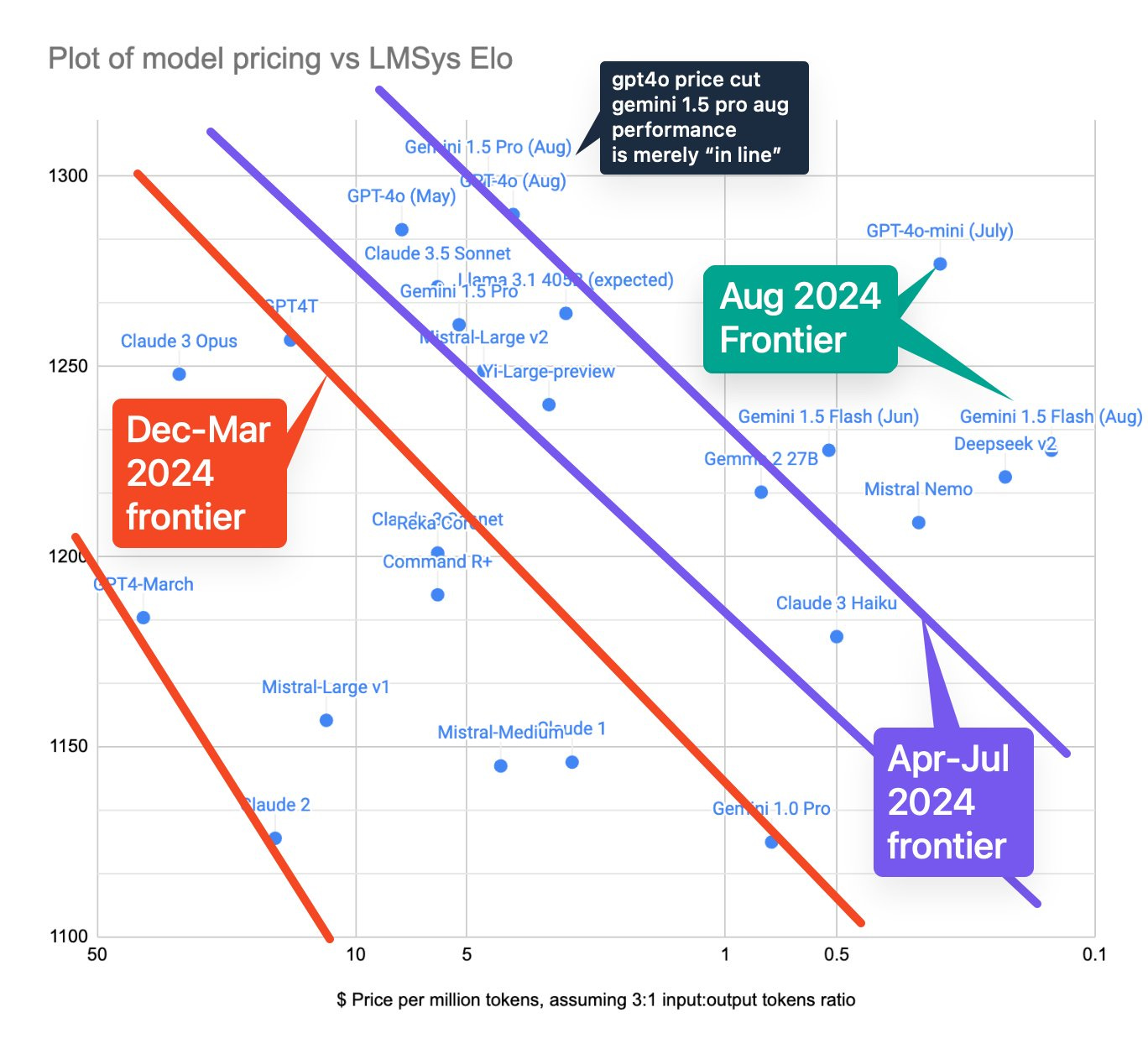

The following graph tells a compelling story. It plots each model's cost per million tokens against its LMSys Elo ranking (a chess-like rating system for AI language models). The trend is clear: newer models are rapidly becoming both better and cheaper.

A quick guide to reading the graph:

X-axis: Cost per million tokens (reverse scale, cheaper to the right)

Y-axis: LMSys Elo rating (higher is better)

Colored lines: Different cohorts of models

Ideal position: Top-right corner (high performance, low cost)

The exciting part? In an ideal world, a model would sit at the topmost, rightmost point on the graph (make number go up!), and that's exactly where newer models are headed, month after month. Up and to the right, baby!
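One way to make "up and to the right" precise is the Pareto frontier: the set of models that no other model beats on both cost and rating. Here is a minimal sketch of that idea. The model names and numbers are invented for illustration and are not read off the graph.

```python
from typing import NamedTuple

class Model(NamedTuple):
    name: str
    cost_per_m_tokens: float  # dollars per million tokens, lower is better
    elo: float                # rating, higher is better

def pareto_frontier(models):
    """Return the models that no other model beats on both cost and rating."""
    frontier = []
    best_elo = float("-inf")
    # Walk from cheapest to priciest; on a cost tie, consider the higher rating first.
    for m in sorted(models, key=lambda m: (m.cost_per_m_tokens, -m.elo)):
        if m.elo > best_elo:  # beats every cheaper (or equally cheap) model seen so far
            frontier.append(m)
            best_elo = m.elo
    return frontier

# Hypothetical data, for illustration only.
models = [
    Model("old-large", 30.0, 1160),
    Model("old-small", 1.0, 1070),
    Model("new-large", 5.0, 1250),
    Model("new-small", 0.3, 1200),
]
print([m.name for m in pareto_frontier(models)])
```

In this toy data, both older models fall off the frontier because a newer model is cheaper and better rated at the same time. That is the pattern the graph shows, cohort after cohort.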

Credit: swyx

beyond model makeovers

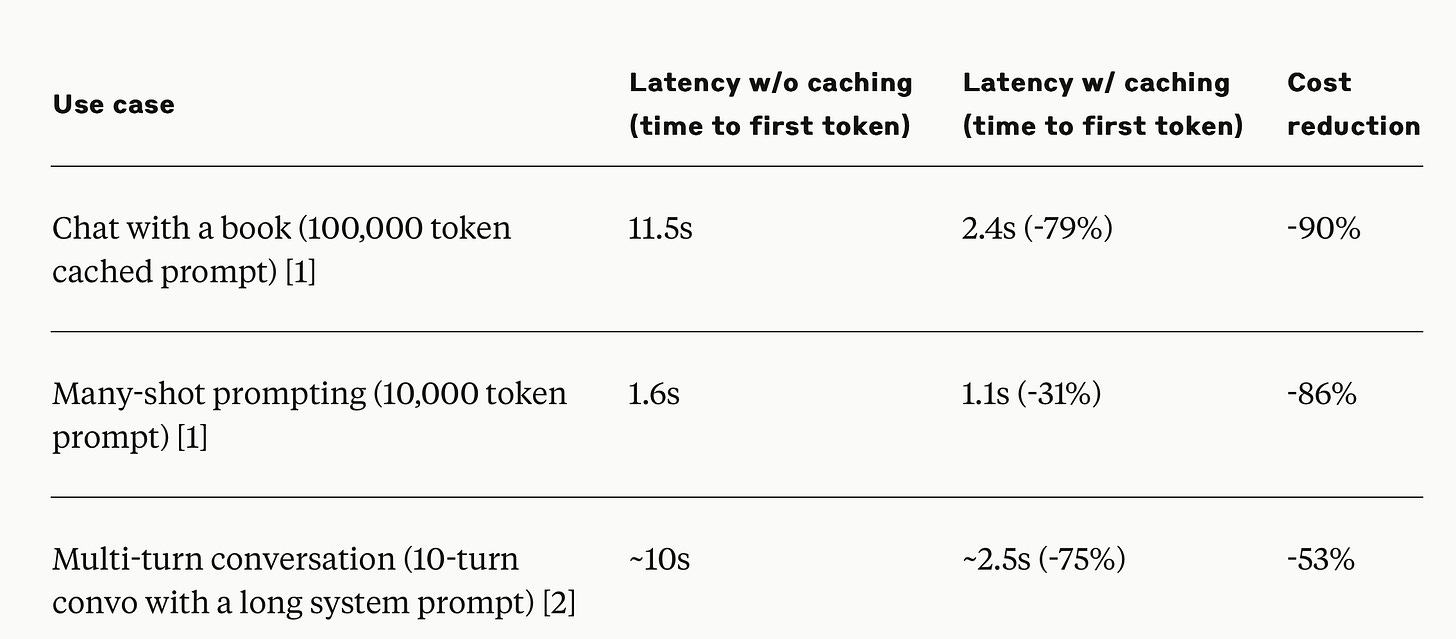

It's not just about overhauling entire models. Sometimes, it's the subtle engineering improvements that make a big difference. Take Anthropic's recent beta release of prompt caching (following a similar feature for Google's Gemini Pro in spring '24). It isn't a universal cost reduction, but it's a significant saving for many use cases, especially those that resend the same long prompt prefix across requests.
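To see why caching helps some workloads and not others, here is a rough sketch of the arithmetic. The multipliers are assumptions: a cache write billed at 1.25x the base input price and a cache read at 0.10x, which is my understanding of Anthropic's launch pricing, so check the current price list before relying on them. The base price, token counts, and function name are hypothetical, and the sketch assumes the cache stays warm across all calls.

```python
def input_cost(prefix_tokens, fresh_tokens, calls, base_price_per_m,
               cached=False, write_multiplier=1.25, read_multiplier=0.10):
    """Total input cost in dollars for `calls` requests sharing one prompt prefix.

    The multipliers are assumed values, not guaranteed to match current pricing.
    """
    per_token = base_price_per_m / 1_000_000
    fresh = fresh_tokens * calls * per_token
    if not cached:
        return prefix_tokens * calls * per_token + fresh
    # The first call writes the prefix to the cache; later calls read it back.
    write = prefix_tokens * write_multiplier * per_token
    reads = prefix_tokens * read_multiplier * per_token * (calls - 1)
    return write + reads + fresh

# Hypothetical workload: a 50,000-token shared prefix, 200 new tokens per call,
# at an assumed base price of $3 per million input tokens.
for calls in (1, 100):
    plain = input_cost(50_000, 200, calls, 3.00)
    cached = input_cost(50_000, 200, calls, 3.00, cached=True)
    print(f"{calls} call(s): ${plain:.4f} uncached vs ${cached:.4f} cached")
```

Under these assumptions, a single call costs slightly more with caching because of the write premium, while a hundred calls against the same prefix cost almost 90% less. That is why it's a big saving for many use cases rather than a discount for everyone.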

These tweaks might not grab headlines like a brand new model, but they're quietly revolutionizing how we use AI.

the ripple effects

These rapid price drops aren't just about saving a few bucks. They're reshaping the landscape of what's possible:

Democratization of AI: This technology can now reach and benefit a broader spectrum of society.

Unlocking New Frontiers: Applications that were once cost-prohibitive are now within reach.

Accelerating Innovation: As AI becomes more accessible, it fuels further technological advancements. Imagine using Claude as your junior software engineer 100x more often—that's a lot more software getting written.

a paradigm shift

So what does all this mean for the future? While it's impossible to predict with certainty, these plummeting costs and skyrocketing capabilities are likely to reshape industries in ways we're only beginning to imagine. Let's consider one particularly intriguing possibility:

We're potentially witnessing a reverse industrial revolution in software. Instead of moving from bespoke goods to mass production, we're transitioning from mass-distributed software to personalized, bespoke solutions for everyone.

Imagine a world where everyone is a software engineer, armed with LLMs too cheap to meter. The result? Software purpose-built for individual needs becomes the norm, not the exception.

That's not just an incremental change—it's a paradigm shift that could reshape the very structure of our society. And it's all being driven by these plummeting AI costs.

So, as we watch these prices fall, remember: we're not just seeing numbers change on a graph. We're watching the future unfold, one cheaper token at a time.