The AI Gold Rush

Big Spending, Little Profit (So Far)

AI infrastructure spending has drawn a lot of scrutiny over the past two years. The worry is that AI isn't bringing in nearly as much money as companies are pouring into it. Yet major tech firms are doubling down, with plans to invest about $200 billion in AI this year alone. What's really happening here? Are we watching an AI bubble about to pop?

Déjà Vu All Over Again: Tech's History of Jumping the Gun

This situation actually follows a common pattern in tech. New technologies often see heavy investment in infrastructure before useful applications catch up. We've seen this seemingly over-the-top spending many times in tech history.

Fiber Frenzy: When the Internet Got Way Too Much Pipe

Let's look back at the late 1990s during the dot-com boom. Many companies spent huge sums laying fiber optic cables, betting on a surge in internet use. At the time, most home users had dial-up connections crawling along at 56 kbps at best. The problem? There weren't enough uses for all this new bandwidth yet. This led to a 'bandwidth glut'. Eventually, applications like video streaming did make use of this infrastructure, but only years later – after the dot-com bubble had burst.

Data Center Dilemma: Build It and They Will... Eventually Come

In the mid-2000s, we saw a similar trend, but this time with data centers – and it was all about business customers. As companies rushed to digitize their operations, tech giants poured billions into constructing massive data centers. They anticipated a surge in demand for cloud computing and data storage. However, just like with fiber optics, the applications lagged behind. Many of these data centers sat partially empty for years, running at low capacity. It wasn't until the late 2000s and early 2010s that cloud services, big data analytics, and eventually AI workloads began to fill these spaces. Once again, the infrastructure was built well before its full potential could be realized.

Even the 1800s Had Tech FOMO

Now for a curveball – let's dial back to the 1800s. Think this overbuilding trend is just a computer-age thing? Hold onto your top hat. Telegraph companies went on a wire-stringing spree, laying thousands of miles of lines alongside railroads. At first, these wires mostly carried business news – your average Joe wasn't exactly itching to tap out Morse code. It took years for telegraph use to boom and turn profitable. This 19th-century example shows that jumping the gun on infrastructure isn't just a modern quirk – it's practically a tech tradition.

Overbuilding Through the Ages

This cycle of building big before the demand catches up is a recurring theme in tech history. We've seen it with railroads in the 1800s, radio networks in the 1920s, and even the early days of electricity distribution. More recently, we've witnessed similar patterns with 3G and 4G mobile networks, and the rush to build satellite internet constellations. Each time, visionaries build infrastructure anticipating future demand, often facing skepticism and financial strain before their bets pay off. AI's current trajectory? It's just the latest verse in a very familiar song.

Big Tech's Billion-Dollar Bet: Spend Now or Regret Later

While history gives us one lens to view the AI investment boom, there's another crucial factor at play: the cutthroat world of big tech competition. In this high-stakes game, the mantra seems to be "build it before your rival does."

These tech giants are sitting on mountains of cash, but here's the twist: tight antitrust scrutiny is making traditional acquisitions tricky. So instead, we're seeing creative workarounds like Microsoft's deal with Inflection and Google's deal with Character.AI – think of them as acqui-hires on steroids.

But why the rush? It's all about staying in the race. Imagine being the company that misses the next big AI breakthrough because you skimped on computing power. Or watching helplessly as a competitor with beefier AI capabilities starts eating your lunch across various markets. Even worse, picture having to rely on your rival's AI services because you didn't build your own. Avoiding that kind of dependence is a big part of why Meta has made open-source AI a strategic priority.

This isn't just about keeping up with the Joneses. It's about avoiding a technological gap that could take years to close. In the fast-paced world of AI, falling behind even for a few months could mean game over. So while the spending might look excessive now, for these companies, it's a calculated bet on staying relevant – or even dominant – in an AI-powered future.

The Nuances of AI Investment: Where to Put Your Chips

Now, while this cycle of overbuilding infrastructure before demand catches up is as old as the telegraph, the AI landscape isn't just one big homogeneous blob. Some areas are already bursting at the seams with investment, while others are still crying out for more attention. Let's break it down:

Hardware: The Chip Race Heats Up

On the hardware side, we're seeing a gold rush in dedicated chips for AI inference. Why? Because these bad boys can seriously slash the cost of running AI models. Take Groq, for instance – they're making waves with their lightning-fast inference engines. But they're not alone in this race.

The cloud giants aren't sitting on their hands either. Google's been flexing with their Tensor Processing Units (TPUs) for a while now, and Amazon and Microsoft are jumping into the custom chip game too. This is actually great news for all of us – more players mean more competition, and that means we're not just stuck with Nvidia calling all the shots.

Observability: Too Many Cooks in the Kitchen?

Now, if you want a classic case of overinvestment, look no further than LLM observability tools. It's the tech equivalent of the California Gold Rush – everyone and their dog is trying to sell picks and shovels to the AI miners. The problem? There's not much to differentiate one from another, and existing players like Sentry and Datadog are already muscling in on the action. It's starting to look a bit crowded in here.

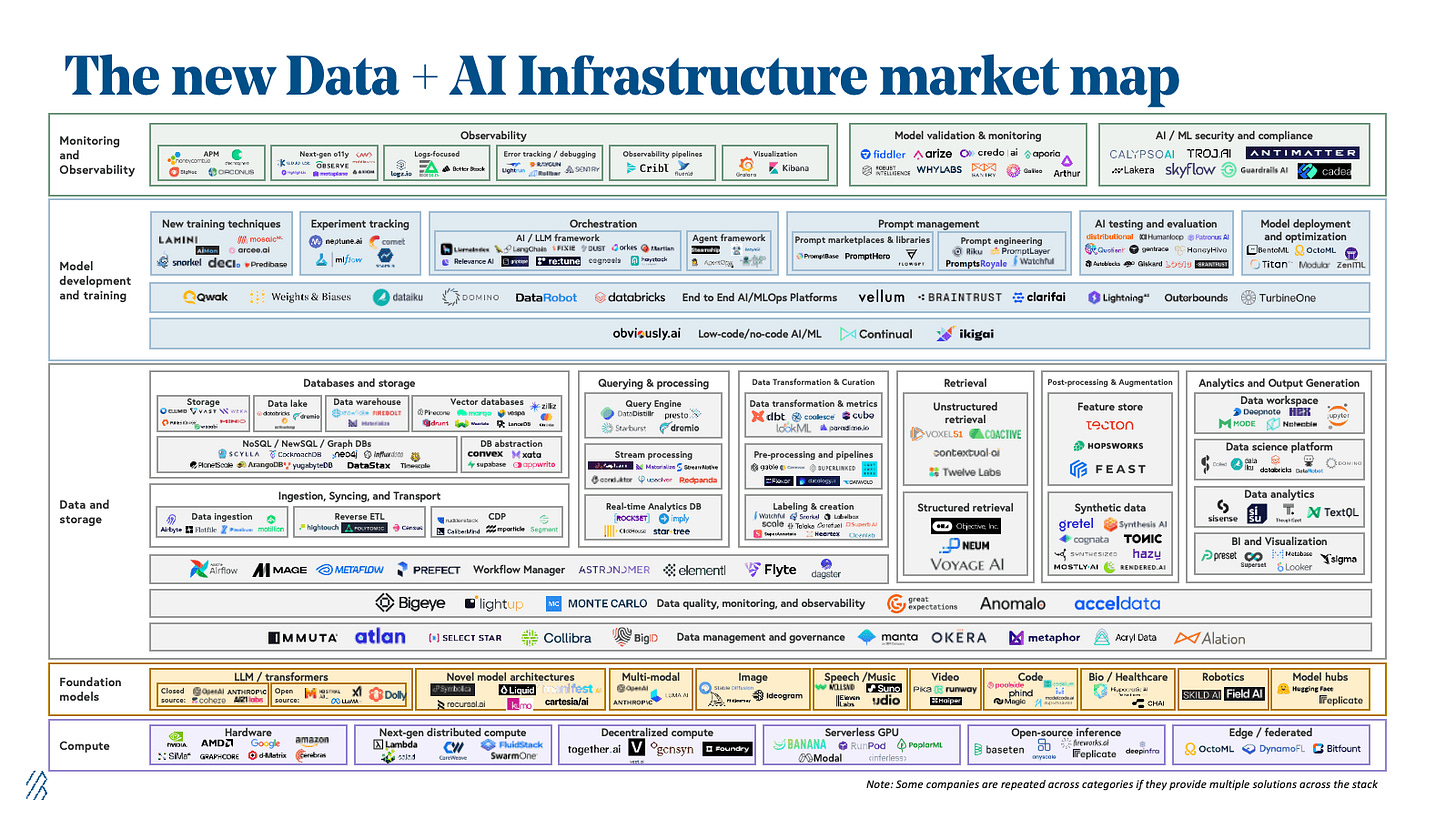

Credit: Bessemer

Applications: Still Finding Their Feet

When it comes to actual AI applications, we're still in the "figuring it out" phase. These apps are grappling with the current limitations of the tech, like struggles with long-term planning and reliability. But don't write them off just yet.

Take Devin, for example – an AI agent for software engineering from Cognition. It's early days, but as foundation models improve, these kinds of tools are only going to get more useful. We're watching the first wobbly steps of what could become a revolution in how we work.

Foundation Models: The Big Squeeze

Now, here's where things get really interesting. The space for foundation models is slowly but surely collapsing into an oligopoly. I actually called this one in my earlier article, "A Bird's-Eye View of the Competitive Landscape in AI". Those recent deals with Inflection and Character? They're not just splashy headlines – they're symptoms of this consolidation in action.

Tomorrow's Tech Today

So, what's the takeaway here? While we're definitely seeing some of that classic tech overspending, it's not a simple story of "AI bubble about to pop". Some areas are overheated, sure, but others are just warming up. The key is to look beyond the hype and see where the real value is being created. As always in tech, it's a wild ride – but that's what makes it exciting, right?

Look, we're still in the early days of AI, and if history has taught us anything, it's that the real magic happens after the initial gold rush. Want to see where this is all heading? Keep your eyes on the early adopters – they're blazing the trail. The bottom line? It's time to build. The AI revolution isn't just coming; it's here, and it's picking up steam. So whether you're a tech giant or a garage startup, the message is clear: jump in, the water's fine (and the chips are hot). Buckle up, folks – the future's looking wild, and I, for one, can't wait to see what's next.