Can OpenAI's o1 build an MVP in 5 mins?

Originally I planned to write on a different topic this week, but then OpenAI dropped a new model series, o1, which I had to try out.

What is o1?

First, why a new model series? OpenAI explains:

OpenAI o1 series models are new large language models trained with reinforcement learning to perform complex reasoning. o1 models think before they answer, and can produce a long internal chain of thought before responding to the user.

Essentially, these models are designed to give the model time to "think" rather than answer immediately. The "internal chain of thought" (CoT) is notable: CoT has been used in prompting for a while, but now it comes natively with the model itself. OpenAI does not expose the internal chain of thought, but ChatGPT does show a summary of it (more on this later).

OpenAI highlights some key points about o1:

These enhanced reasoning capabilities may be particularly useful if you’re tackling complex problems in science, coding, math, and similar fields.

And:

For applications that need image inputs, function calling, or consistently fast response times, the GPT-4o and GPT-4o mini models will continue to be the right choice.

So these new models are not a complete replacement for other models, and they are not strictly superior to previous models. Instead, they fill a more specialized role in planning and reasoning. Until now, application developers have had to engineer planning and reasoning into their applications themselves, so it's a big upgrade to have the model handle some of this (though I suspect most applications will still benefit from bespoke CoT workflows).

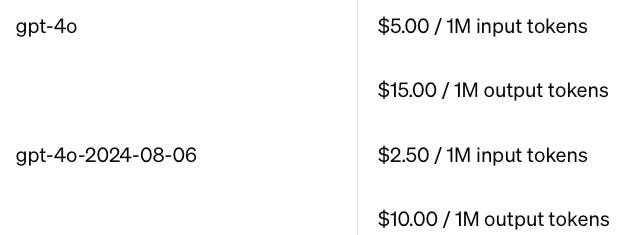

Along with the base o1 model (released as o1-preview), OpenAI also introduced o1-mini, which trades some capability for lower latency and cost. Speaking of cost, o1 is about 5-6x more expensive than GPT-4o. This drives home the point that the model is not a drop-in replacement for all inference needs.
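The per-token price is only part of the story: OpenAI bills the hidden reasoning tokens as output tokens, even though you never see them. Here is a rough per-request estimator as a sketch; the prices are placeholder parameters, not OpenAI's actual rates, so plug in current numbers from the pricing page.

```python
def estimate_cost_usd(
    input_tokens: int,
    visible_output_tokens: int,
    reasoning_tokens: int,
    input_price_per_m: float,
    output_price_per_m: float,
) -> float:
    """Rough per-request cost; hidden reasoning tokens are billed at the output rate."""
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * input_price_per_m + billed_output * output_price_per_m) / 1_000_000


# Hypothetical prices per 1M tokens, for illustration only.
cost = estimate_cost_usd(2_000, 1_500, 6_000, input_price_per_m=10.0, output_price_per_m=40.0)
print(f"${cost:.4f}")  # prints $0.3200
```

In this made-up example, the 6,000 reasoning tokens account for most of the bill, which is why a "thinking" model can cost noticeably more than its per-token ratio suggests.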

You can also try o1 and o1-mini through ChatGPT, though note that the quota is much more limited:

Both o1-preview and o1-mini can be selected manually in the model picker, and at launch, weekly rate limits will be 30 messages for o1-preview and 50 for o1-mini.

Build me an app in 5 mins

To put the new o1 model through its paces, I decided to "speed run" creating a simple app as quickly as possible. I especially wanted to try this because of o1's purported improvements in coding ability. Because o1 is more expensive and deliberative, this is not how I would actually design an application, but it's fine for a fun little experiment.

Here's my prompt to Claude, which I used to refine my requirements for o1:

o1's limited quota makes every query feel very substantial, like firing a .50 cal gun. So I did some prep work in other LLMs to get o1's prompt just right. To quickly summarize: I wanted to create an app that could take a picture and output an ICS file (a standard calendar file format) that I could later import into my calendar (coincidentally, Apple is bringing this feature to the new iPhone 16 through its visual intelligence feature). I'd be using GPT-4o and its new structured outputs feature to extract structured data from images. Structured outputs, released in August 2024, make the model's responses more predictable and easier to parse. As I'm often reminded, being on the cutting edge often leads to bleeding (more on this later).
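For readers who haven't seen one, an ICS file is just structured text. Below is a minimal sketch of turning one extracted event into ICS with the standard library; it is not the code o1 generated, the `Event` fields and the PRODID value are assumptions, and it skips RFC 5545 line folding (lines over 75 octets) for brevity.

```python
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Event:
    title: str
    start: datetime  # expected to be timezone-aware
    end: datetime
    location: str = ""


def _fmt(dt: datetime) -> str:
    # iCalendar UTC date-time form, e.g. 20240920T190000Z
    return dt.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")


def _escape(text: str) -> str:
    # RFC 5545 TEXT escaping: backslash, semicolon, comma, newline
    return (text.replace("\\", "\\\\").replace(";", "\\;")
                .replace(",", "\\,").replace("\n", "\\n"))


def to_ics(event: Event) -> str:
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//photo-to-ics sketch//EN",  # hypothetical product identifier
        "BEGIN:VEVENT",
        f"UID:{uuid.uuid4()}@example.invalid",
        f"DTSTAMP:{_fmt(datetime.now(timezone.utc))}",
        f"DTSTART:{_fmt(event.start)}",
        f"DTEND:{_fmt(event.end)}",
        f"SUMMARY:{_escape(event.title)}",
    ]
    if event.location:
        lines.append(f"LOCATION:{_escape(event.location)}")
    lines += ["END:VEVENT", "END:VCALENDAR"]
    # RFC 5545 requires CRLF line endings
    return "\r\n".join(lines) + "\r\n"


ev = Event(
    "Team dinner",
    datetime(2024, 9, 20, 19, 0, tzinfo=timezone.utc),
    datetime(2024, 9, 20, 21, 0, tzinfo=timezone.utc),
    "Main St, Springfield",
)
print(to_ics(ev))
```

Writing every time in UTC with a trailing `Z` sidesteps time zone definitions entirely, at the cost of needing timezone-aware inputs.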

After some back and forth with Claude, I had a quick requirements document that I then passed to o1. o1 did a good job initially, taking ~40 seconds to devise a plan and generate the necessary code. Once the initial implementation was complete, I went back and forth with o1 to refine the specs. The full conversation can be found here.

In the end, o1 got about 90% of the way to a working solution. It was very impressive, but it still required my input to refine its work. Excluding the planning and prep work, o1 took only a few minutes. And o1 was exhaustive (when prompted appropriately), generating a complete working structure:

Here's a screenshot of the working app:

I say it only got 90% there because the frontend had trouble sending data to the backend. Specifically, the date and time formats used on the frontend differed from those the backend expected. This was exacerbated by my subpar prompting, which didn't extract well-structured data. Ironically, integration between frontend and backend is a common gotcha for flesh-and-blood developers too. Integration work remains a pain! Better data modeling and more thought about architecture would have avoided these issues, and those are precisely the skills great software engineers possess.
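One way to make this kind of mismatch fail loudly instead of silently is to validate dates at the backend boundary against an explicit allow-list. A minimal sketch, assuming the frontend sends ISO 8601 strings or one of two hypothetical US-style formats (the app's actual formats aren't shown here):

```python
from datetime import datetime

# Hypothetical non-ISO formats a frontend might send.
ACCEPTED_FORMATS = (
    "%m/%d/%Y %H:%M",     # e.g. 09/20/2024 19:00
    "%m/%d/%Y %I:%M %p",  # e.g. 09/20/2024 07:00 PM
)


def parse_event_datetime(value: str) -> datetime:
    """Try ISO 8601 first, then the allow-list; raise instead of guessing."""
    value = value.strip()
    try:
        return datetime.fromisoformat(value)
    except ValueError:
        pass
    for fmt in ACCEPTED_FORMATS:
        try:
            return datetime.strptime(value, fmt)
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date/time format: {value!r}")
```

Raising on unknown input turns a silent frontend/backend disagreement into an error message you can see on the first request.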

Observations and Learnings

I spent about an hour getting this all to work, and I was impressed with the initial results. It was also useful to see where this new class of model may have its limits.

Here are my initial impressions and insights:

As prescribed by OpenAI, o1 is best used as a reasoner/planner, not for grunt work. Perhaps in my next experiment, I'll have it generate a detailed spec for GPT-4o to follow. Using o1 as the "individual contributor" seems suboptimal. I suspected as much going in, but it was good to experience it first-hand.
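The planner/executor split I have in mind could look roughly like this. It's a sketch, not something I've built: `complete` is a hypothetical stand-in for whatever LLM client you use, injected as a function so the example runs offline, and the model names are just defaults.

```python
from typing import Callable, List

# Hypothetical signature: (model_name, prompt) -> response text.
CompleteFn = Callable[[str, str], str]


def plan_then_execute(task: str, complete: CompleteFn,
                      planner: str = "o1-preview", executor: str = "gpt-4o") -> List[str]:
    # Step 1: the slower reasoning model writes a spec, one step per line.
    spec = complete(planner, f"Write a numbered, step-by-step implementation spec for:\n{task}")
    steps = [line.strip() for line in spec.splitlines() if line.strip()]
    # Step 2: the cheaper, faster model carries out each step.
    return [complete(executor, f"Implement this step and return only code:\n{step}")
            for step in steps]


def fake_complete(model: str, prompt: str) -> str:
    # Offline stand-in: the "planner" returns a fixed spec, the "executor" echoes the step.
    if model == "o1-preview":
        return "1. Parse the image\n2. Build the ICS file"
    return f"[{model}] " + prompt.splitlines()[-1]


print(plan_then_execute("photo to calendar app", fake_complete))
# prints ['[gpt-4o] 1. Parse the image', '[gpt-4o] 2. Build the ICS file']
```

Executing steps independently keeps each executor call small, but in practice you'd probably pass the full spec (or earlier outputs) along with each step so the pieces fit together.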

Problem specification still matters a lot.

Using Claude / GPT-4o to refine your thinking and prepare it for a more capable reasoning model like o1 seems like a good tradeoff.

Using Claude / GPT-4o as a "thought partner" for refining your problem and the associated solution seems especially useful. I used these models not only to provide the final specification, but also to think through what it was I wanted out of my little app.

This part actually took the longest; it's why I think software engineering is going to evolve. Not everyone is going to want to sit down and deconstruct a problem. The skills of the best "traditional" software engineers and the software engineers of tomorrow are largely the same in this regard.

Like all models, o1 has a knowledge cutoff that hampered its ability to use the latest and greatest.

For this reason, RAG still has a very important role to play, even as models get more powerful.
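In its simplest form, retrieval means picking the most relevant documentation snippets and putting them in the prompt, which is roughly what I did by hand when pasting docs into o1. Here is a toy sketch that ranks snippets by keyword overlap (real RAG systems usually use embeddings instead); the snippets are illustrative placeholders.

```python
import re
from collections import Counter
from typing import List


def _tokens(text: str) -> Counter:
    # Lowercased word counts; hyphens kept so "gpt-4o" stays one token.
    return Counter(re.findall(r"[a-z0-9_\-]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Rank docs by shared-term count with the query (a stand-in for embedding search)."""
    q = _tokens(query)
    scored = [(sum((q & _tokens(d)).values()), i) for i, d in enumerate(docs)]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [docs[i] for score, i in scored[:k] if score > 0]


def build_prompt(question: str, docs: List[str]) -> str:
    context = "\n---\n".join(retrieve(question, docs))
    return f"Use ONLY the documentation below.\n\n{context}\n\nQuestion: {question}"


docs = [
    "Structured outputs: pass a JSON schema so replies match it.",
    "gpt-4o accepts image inputs alongside text.",
    "Billing FAQ: invoices are issued monthly.",
]
print(build_prompt("How do I send image inputs to gpt-4o?", docs))
```

Snippets with no overlapping terms are dropped rather than padded in, so irrelevant context doesn't crowd the prompt.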

Ironically, o1 hallucinated egregiously about AI model names and API calls. It kept wanting to use gpt-4, despite strong prompting indicating that newer models with newer capabilities, like multimodality, were available. I even provided snippets of documentation and told it to reference them.

It struggled especially with structured outputs, even when I provided an example.
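For reference, this is roughly what a structured outputs request needs: a JSON Schema wrapped in the `response_format` argument of the Chat Completions API, with `strict` enabled. A sketch under assumptions: the event field names are hypothetical, the actual API call is omitted so it runs offline, and `parse_event` is a defensive local check rather than part of the SDK.

```python
import json

# JSON Schema for one calendar event; field names are hypothetical.
EVENT_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "start": {"type": "string", "description": "ISO 8601 local date-time, e.g. 2024-09-20T19:00"},
        "end": {"type": "string", "description": "ISO 8601 local date-time"},
        "location": {"type": "string"},
    },
    "required": ["title", "start", "end", "location"],  # strict mode: list every property
    "additionalProperties": False,                      # strict mode requires this too
}

# Value passed as `response_format` when requesting structured outputs.
RESPONSE_FORMAT = {
    "type": "json_schema",
    "json_schema": {"name": "calendar_event", "strict": True, "schema": EVENT_SCHEMA},
}


def parse_event(raw: str) -> dict:
    """Check the model's JSON reply before it reaches downstream code."""
    event = json.loads(raw)
    missing = [k for k in EVENT_SCHEMA["required"] if k not in event]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    extra = set(event) - set(EVENT_SCHEMA["properties"])
    if extra:
        raise ValueError(f"unexpected fields: {sorted(extra)}")
    return event
```

Putting the expected date format in the schema's `description` is one cheap way to nudge the model toward output the backend can actually parse.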

While getting to a working prototype is very useful, iteration remains king.

I integrated some specific knowledge about LLM calls, like tuning the temperature parameter to better serve the task at hand. This is not something that's easy to discern early on.

"Last mile" knowledge like how to deploy an app is still immensely valuable and hard to prompt for.

Latency is very task dependent, which was cool to see. This makes sense, because like humans, some tasks require more thinking than others.

One response took ~40 seconds, another ~20 seconds.

This variability can be a problem for real-time applications, but as mentioned earlier, o1 excels at reasoning, so real-time work is not its best use case.

The speed at which o1 can generate a working prototype suggests that the role of software developers may evolve toward problem definition, architecture design, and fine-tuning rather than writing every line of code from scratch. I didn't review, or even care about, any of the generated code.

Unrelated to anything I tried, but pointed out by a friend: o1 and o1-mini provide the most output token capacity of any OpenAI model (up to 65k tokens):

I speculate that o1 excels at "self-contained" reasoning work, as demonstrated by OpenAI's benchmarks in competitive coding, math, and science. Software engineering entails a lot more than coding, including integration between different systems, requirements gathering, and testing. Through this lens, the shortcomings I observed are not surprising. Wiring HTML, JS, and Python together is often annoying integration work that requires experimentation. And often, after building a prototype, you find that you underspecified your requirements, but the only way to find out is by doing.

All this being said, I'm less than 24 hours into using o1 so I'm sure I'll learn more about its optimal use over time. 😅

Conclusion

o1 represents a significant step forward in AI reasoning capabilities, but it's not a one-size-fits-all solution. Its strengths lie in complex problem-solving and planning, rather than rapid-fire tasks or real-time applications. As we continue to explore and experiment with o1, we'll likely uncover more optimal use cases and integration strategies. The future of AI-assisted development looks promising, but it's clear that human insight and expertise will remain crucial in navigating the complexities of software engineering.