From Doodle to Da Vinci: An AI Art Primer

Unleash Your Inner Artist

I've delved into AI art recently and discovered some great resources. AI art is a game-changer, offering unparalleled access to creative expression. While my guide is not comprehensive, it's an excellent starting point for anyone interested in exploring this field.

I've had a great time experimenting with AI art, and I encourage you to do the same!

game plan

Even though this space is in its infancy, the tools at our disposal already occupy a broad spectrum, so we have a lot to cover. I’ve organized tools by interest level and desired outcomes, so I encourage you to skip around:

doodlin’: the fastest way to get started. These toys require no assembly and batteries are included.

workin’: ready to level up? These tools provide workflows for prolonged, interactive, user-led creative sessions. They include power user features that facilitate professional work.

shippin’: Is it time to ship an application to production? You’ll have more sophisticated needs, and these tools will help get the job done. This is especially relevant if you are a developer, designer, product manager, or entrepreneur.

learnin’: AI art is rapidly evolving, and here are a few handy places where you can learn from other pixel Picassos.

doodlin’

For your first taste of AI art, I’d start with profile picture services. There are many, but the ones that stand out are ProfilePicture.AI, Avatar AI, and Lensa AI. Getting started with these services is effortless: you only need photos of yourself and minimal additional input. Before uploading anything, though, review each service’s privacy policy to make sure its data retention policy matches your expectations.

To further your artistic journey, I recommend simple input services including:

Diffusion Bee: a Mac app that enables easy image generation with simple prompts.

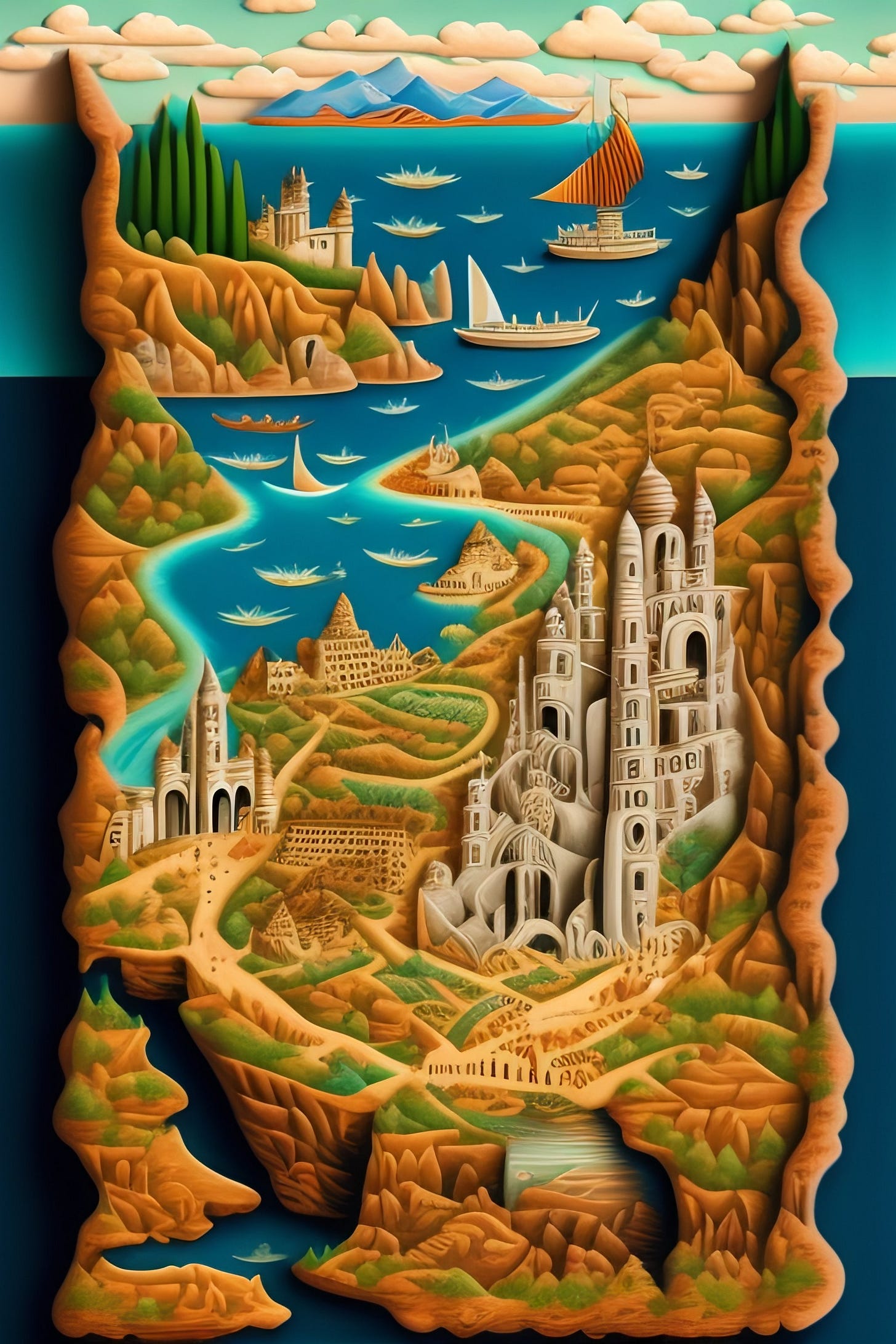

Scribble Diffusion: draw a doodle and get a picture! You have to try it to believe it.

Stableboost: edit your photos with simple instructions. This is similar to the profile picture services above, but with more control over the output.

Midjourney: one of the leaders in AI art. You provide prompts to a Discord bot and it generates beautiful images for you. MJ has a distinctive style that many enjoy.

DALL-E 2 by OpenAI is an honorable mention. It does not seem to enjoy the same mindshare, but functions very similarly and inspired many of the tools above.

An aside: Stable Diffusion (SD) is the open source model that launched 1000 generative art tools, and it is the base for most of the models you’ll encounter.

I referenced “prompts” and “instructions” above as inputs these tools require to produce images. Prompt design is a little bit of an art (ha), which you learn incrementally and through others (see learnin’). When you want to continue your journey to digital Da Vinci, you’ll need more advanced features.

workin’

You’ll find that, as great as the aforementioned tools are, they don’t produce the best results immediately. Like other creative endeavors, AI art is highly iterative.

When you want to get a piece just right, the following tools are here to help.

Stable Diffusion web UI: the tool a lot of power users live by. It requires some effort to set up[1], but it is typically at the cutting edge of capabilities and advancements. On the other hand, the UX is not super polished, and it can be unstable. Because it’s open source, it commands a lot of mindshare and has a huge community.

InvokeAI: the new open source kid on the block. It focuses on a great UX and stability, at the cost of slower feature rollouts. I think of it as a good compromise between commercial/proprietary offerings and SD web UI: it’s full-featured but easy to pick up. It does require more setup[2] than the commercial options.

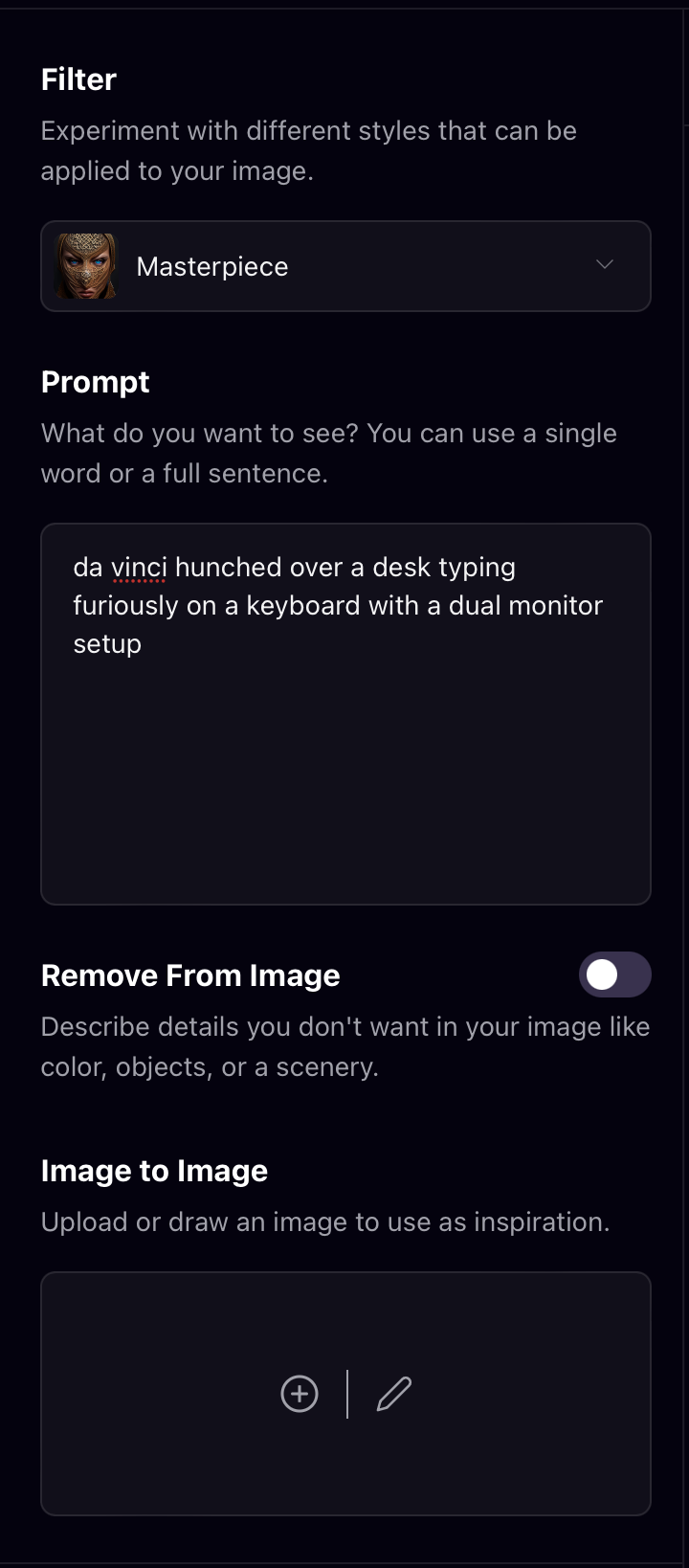

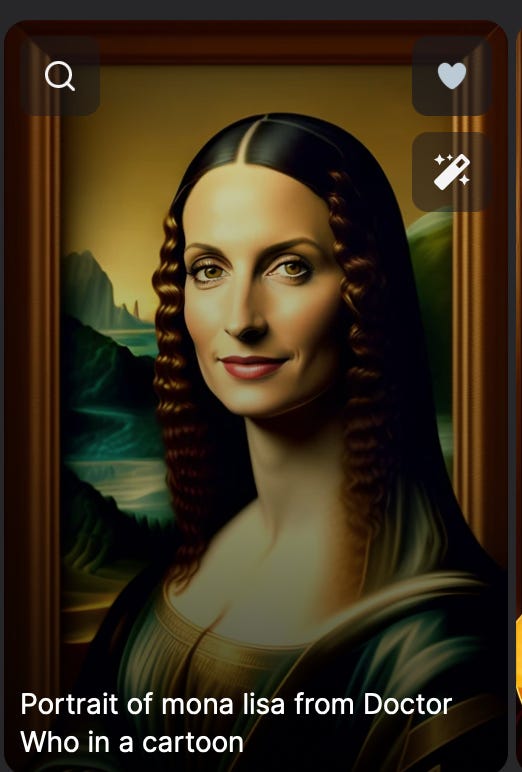

Playground AI: makes it very easy to get started because it’s very close to a photo editor, with helpful hints and explanations. The UX is simply *chef’s kiss*. It has tons of sharing options and a generous free tier.

Lexica: a commercial art generator that leverages a proprietary model. It emphasizes sharing of art and their corresponding prompts.

These tools provide the following advantages:

BYO model: The open source variants allow you to maintain a library of models (more on this later).

Editing features:

In-painting: tweak smaller parts of an image without regenerating everything.

Out-painting: generate content outside the generated frame. For example, this can be helpful if the originally generated image feels off-center.

Image to Image: use an existing (or generated!) image as an input to the generation process. This enables rapid iteration on a concept because you don’t need to start from scratch.

Many others…

Post-processing features:

Upscaling: increase the limited resolutions of models (eg 512 by 512 pixels) to something you can publish and share.

“Face fixing”: tweaks human faces to be more realistic, like pupils that are centered.

Discovery: the commercial tools do a great job of surfacing different user-generated art. Sharing is very easy, and it’s fun to learn from others.

Education: This varies by tool, but some do a great job of teaching newcomers. Playground AI in particular is great about guiding users through the creation process (eg prompt design, how to use editing features). Even when I’m not directly leveraging them, I take inspiration for prompts from Lexica and Playground AI (see learnin’).
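In-painting, listed under the editing features above, relies on a mask that marks which pixels are allowed to change. The toy sketch below illustrates only the final compositing idea, on tiny grayscale grids; it is a simplified assumption, since real tools work on full-color images and the new pixels come from the diffusion model itself rather than from a fixed array. The `composite` helper is hypothetical.

```python
# Toy illustration of the masking idea behind in-painting (compositing step only).
# Real tools operate on RGB images and the "generated" pixels come from the model;
# here both images are fixed 3x3 grayscale grids for clarity.

def composite(original, generated, mask):
    """Keep original pixels where mask is 0; take generated pixels where mask is 1."""
    return [
        [g if m else o for o, g, m in zip(orow, grow, mrow)]
        for orow, grow, mrow in zip(original, generated, mask)
    ]

original = [[10, 10, 10],
            [10, 10, 10],
            [10, 10, 10]]
generated = [[99, 99, 99],
             [99, 99, 99],
             [99, 99, 99]]
# Only the center pixel is "painted over"; everything else is preserved.
mask = [[0, 0, 0],
        [0, 1, 0],
        [0, 0, 0]]

result = composite(original, generated, mask)
print(result)  # [[10, 10, 10], [10, 99, 10], [10, 10, 10]]
```

This is why in-painting lets you fix a hand or a face without regenerating the whole image: everything outside the mask stays as it was.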

These tools professionalize AI art and some power users are commercializing their work. That being said, your path to machine learning Michelangelo does not need to end here.

shippin’

I kinda lied when I said this was a progression. I suspect for many people the tools described in workin’ are enough. However, if you have a need to generate AI art at scale, you’ll need additional functionality.

finding models

First, let’s cover model directories. These sites curate open source models, created by organizations and ordinary users. Model publishers usually provide value by fine-tuning on a particular dataset. The model directories that stood out to me are:

Civitai hosts many user-created fine-tuned models. There’s a lot of experimentation going on here, but be forewarned that there’s also a lot of NSFW content and models.

Hugging Face is well known for hosting models of all kinds (not just image generation). While it’s invaluable for discovering foundation models, I’ve found it suboptimal for browsing image generation models specifically; other platforms showcase examples much better and make it easier to hop from model to model (for example, it’s very annoying that HF opens a new tab for each model you want to explore).

Replicate is similar to Civitai in terms of model exploration, though its selection is more limited. However, Replicate is awesome because it’s primarily a platform where you’re able to run models (more on this later).

models as a service

Once you find a model that’s suitable for your use case and aesthetic, you’ll need to run it. The large cloud providers (AWS, Azure, Google Cloud) certainly have offerings that enable running models. However, I found that the specialized providers offer a better developer experience and are easier to get started with. These services allow you to stand up an API based on a model in minutes rather than hours or days.

The services that stood out to me are:

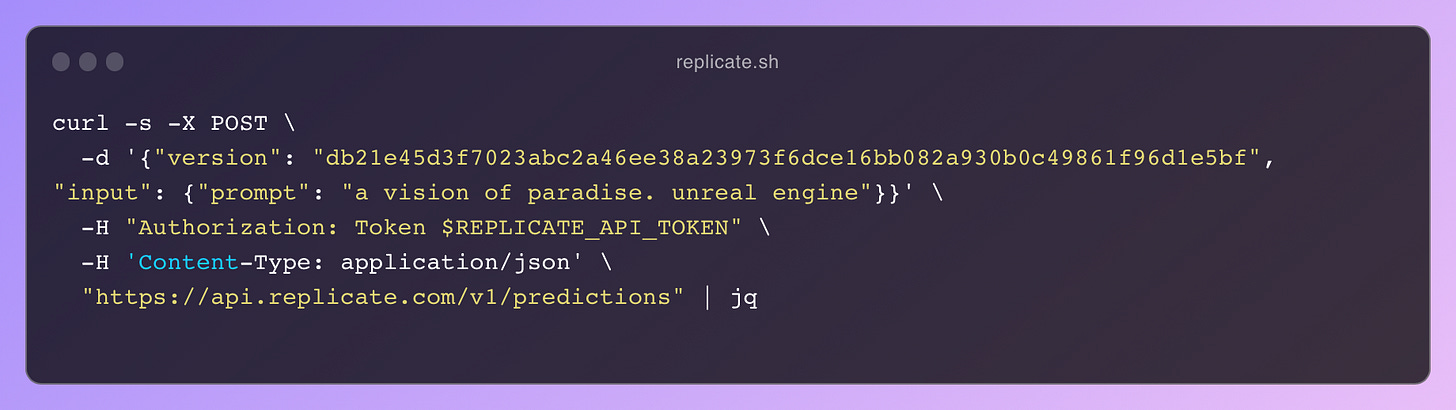

Replicate: As previously mentioned, Replicate is very good for exploring models, but their bread and butter is model hosting. You can use the models in their directory or upload your own. You pay based on seconds of compute, and models in their directory have helpful estimates of what you can expect in terms of cost. Replicate is essentially serverless. The only infrastructure choice you have to make is the GPU for your models.
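Because Replicate bills per second of compute, estimating spend is simple arithmetic. A minimal sketch, assuming a hypothetical 5 seconds per image and a hypothetical rate of $0.002 per second; these are placeholders, not Replicate’s actual prices, so check the estimates shown on each model’s page.

```python
# Back-of-the-envelope cost estimate for per-second GPU billing.
# All numbers below are hypothetical placeholders, not real Replicate prices.

def estimate_cost(num_images: int, seconds_per_image: float, price_per_second: float) -> float:
    """Total cost when you only pay for the seconds the model is actually running."""
    return num_images * seconds_per_image * price_per_second

cost = estimate_cost(num_images=1_000, seconds_per_image=5, price_per_second=0.002)
print(f"${cost:.2f}")  # $10.00
```

Swapping in a slower model or a pricier GPU only changes the two rate arguments, which makes it easy to compare options before committing.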

Paperspace: offers less in the way of model discovery, but the platform is top-notch. It has more of an emphasis on infrastructure options, and is closer to the traditional cloud providers. You can pay for dedicated instances with GPUs. It also has a generous allotment for shared instances that fluctuate based on availability. Paperspace has many other offerings that may be valuable as your needs become more sophisticated (more below). I enjoy using Paperspace in particular for experimenting with code as their Jupyter notebook support is amazing. My mental model for using Paperspace is simply “I want a server with a beefy GPU.”

As a builder, these services are my favorite recent discovery: they facilitate rapid, cheap iteration because you pay for compute on a fractional basis.

scaling

Platforms that expose models as a service are great, but eventually you may need more. I actually haven’t reached that point personally, but it’s good to understand what’s around the bend.

The main reasons I think other options should be considered are:

cost: the convenient options come with a higher unit cost. This is not surprising, as it mirrors the existing segmentation of cloud services; if you have a ton of traffic, it may be worthwhile to have dedicated instances or exit the cloud altogether. On the flip side, if your application’s demand is spiky, higher unit costs may be a reasonable compromise.

control: services that abstract away interaction with models have to make choices about what will run and how. If your application needs an esoteric software package, you may need more control over the underlying infrastructure. Similarly, you may want low-level control of the constituent infrastructure components, like networking and storage.
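The cost tradeoff above can be framed as a break-even point: a dedicated instance is billed around the clock whether or not it is busy, while per-second billing only charges for time spent generating. A minimal sketch with hypothetical prices ($0.002 per GPU-second versus $1.00 per hour for a dedicated GPU, 5 seconds per image), assuming a single dedicated instance can keep up with the volume; all function names are illustrative.

```python
# Compare per-second ("serverless") billing against an always-on dedicated GPU.
# Prices are hypothetical placeholders; plug in real quotes from your provider.

HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)

def monthly_serverless_cost(images: int, seconds_per_image: float, price_per_second: float) -> float:
    # You pay only for the seconds spent generating.
    return images * seconds_per_image * price_per_second

def monthly_dedicated_cost(hourly_rate: float) -> float:
    # A dedicated instance bills every hour, busy or idle.
    return hourly_rate * HOURS_PER_MONTH

def break_even_images(seconds_per_image: float, price_per_second: float, hourly_rate: float) -> float:
    # Monthly volume at which both options cost the same.
    return monthly_dedicated_cost(hourly_rate) / (seconds_per_image * price_per_second)

threshold = break_even_images(seconds_per_image=5, price_per_second=0.002, hourly_rate=1.00)
print(f"Break-even: about {threshold:,.0f} images per month")  # Break-even: about 73,000 images per month

# A quiet month vs. a busy month under the same assumptions.
for images in (10_000, 200_000):
    serverless = monthly_serverless_cost(images, 5, 0.002)
    dedicated = monthly_dedicated_cost(1.00)
    cheaper = "per-second" if serverless < dedicated else "dedicated"
    print(f"{images:,} images: per-second ${serverless:,.0f} vs dedicated ${dedicated:,.0f} -> {cheaper}")
```

Below the threshold, the higher unit cost of per-second billing still wins because you never pay for idle time; above it, an always-on instance becomes the cheaper option.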

This boils down to accessing GPUs, the core resource of model inference, and configuring the rest. Many refer to this as their “GPU Cloud” offering.

The options that I noted are:

Traditional cloud providers: AWS, Azure and Google Cloud offer a variety of hosting options that provide access to GPUs, which can be attached to traditional server instances or used with container services. This offers the wider flexibility of a cloud provider, like the ability to configure services more precisely and to use other supporting infrastructure like object storage.

Lambda: specializes in GPU workloads and boasts very competitive pricing. They offer the flexibility of second-level pricing and the cost savings of dedicated instances. Interestingly, they also offer developer workstations tailored to AI/ML workloads that leverage their hardware expertise.

Paperspace: also has its own GPU cloud, in addition to the simpler model hosting described above. I think a distinct advantage Paperspace has is that it’s a developer-oriented, “full stack” platform; you can deploy models easily and then “graduate” to its GPU cloud with minimal context-switching. In this sense I think of it as “GitHub for ML workloads,” in that you can accomplish much of what you need in one place.

You’ve made it this far, but as a digital palette padawan you’ll need to keep learning, and that’s what the next section is about.

learnin’

As I alluded to earlier, prompt design is important and an art unto itself. I find that the easiest way to learn what makes a good prompt is by learning from others (mimetic learning at its best).

prompts

First, many generation tools do a great job of exposing the work of others. I’ve found Lexica and Playground AI are best in this regard. They make it easy to jump between related images and styles, and very easy to create based on existing work. I love the idea of TikTok-style remixing of AI art.

There are also dedicated prompt sharing sites and marketplaces, like PromptBase, Public Prompts, and PromptHero. Personally, I don’t generally see the point of paying for prompts as there’s a lot of fun and learning to be had doing it yourself. However, I see these marketplaces as positive contributors to the ecosystem, especially for people who want a hands-off approach to generating AI art.

Prompt sharing sites list the model and version alongside each prompt because prompts generally aren’t portable across models, which have different styles and are trained on different data. However, I like to take inspiration from prompts in a broader capacity when painting broad (digital) strokes, such as when ideating on a concept. For example, Lexica’s model is proprietary, but I enjoy looking through its art to get a sense of the aesthetic I’m targeting.

community

I’ve found the r/StableDiffusion subreddit invaluable for learning what’s new and for picking up workflows that produce high-quality images, though this takes more effort. As with prompts, the learning is more holistic because others’ workflows don’t typically transfer one-to-one (and that’s a good thing!).

Small caution: because the space moves quickly, it’s easy to get overwhelmed by the shiny new thing, so I recommend rationing your consumption; otherwise you’ll be inundated with noise. Over time you’ll see long-term trends emerge (hello ControlNet).

creators

I’d be remiss if I didn’t give shoutouts to creators doing an amazing job of distilling new developments and making them practical.

Josh at Mythical AI writes great articles on what’s new, and how to use it.

Sebastian creates great video tutorials leveraging these tools, like one describing his own workflow for YouTube thumbnails.

Over on Twitter, DiffusionPics curates some of the best AI art.

closin’

AI art is very exciting, and while I tried my best to categorize these tools, the truth is much muddier. Many offerings overlap in audience, intended use case, and what they help you accomplish. I expect the Cambrian explosion to continue.

No matter your goals, the tools for creative expression exist, and I encourage you to start creating!

[1] If you choose to run an open source application, you should make sure you have a capable graphics card, though the requirements for these tools are fairly modest. If you want a faster feedback loop (which you will once you’re addicted), I recommend a service with dedicated GPUs like Paperspace. Its GPUs will make your generations fly. I think services like these fill an interesting niche: they are easier to use than the existing cloud providers and offer a full-stack interactive development environment for AI/ML workflows.

[2] See above on setting up an open source model.