LLM engineering: Context Windows

A new kind of code golf

Software engineering against Large Language models (LLMs) like OpenAI’s GPT-4 is very different than traditional software. We use natural language instead of formally-defined programming languages, which makes output non-deterministic.

One practical constraint when working with LLMs is the context window. The context window is the total number of tokens1 a model can process or generate at once.

Different models may have different context window limitations. GPT-3.5-turbo (one of the models powering ChatGPT ) has a 4096 token limit, while GPT-4 varies from ~8k to ~32k.

anatomy of a context window

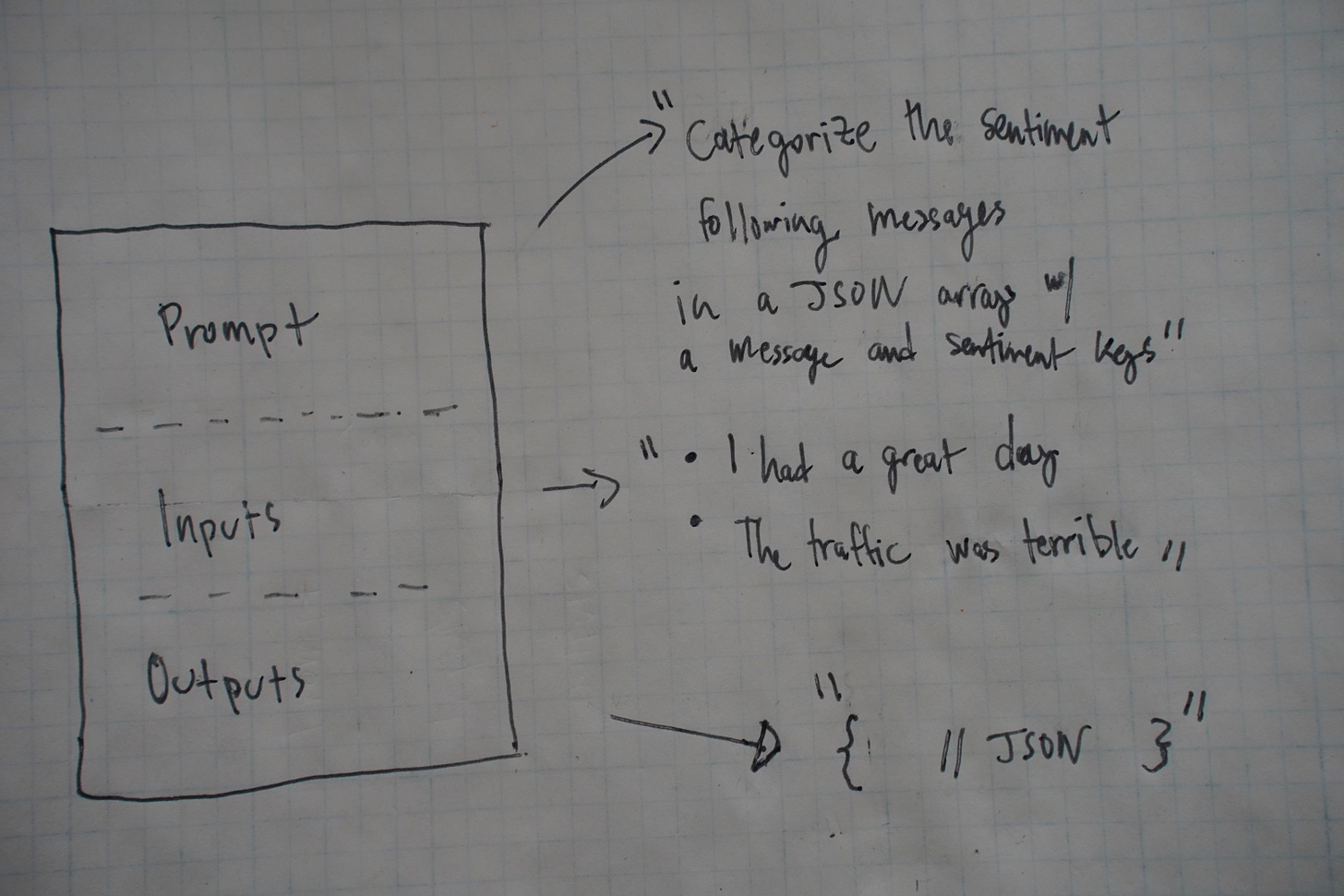

I think of context windows as follows:

Prompt

This is how a developer requests an LLM perform a task, and is the subject of “prompt engineering”. An example prompt might look like “find the relevant passages from the following texts that answers the user’s questions” or “generate funny company names given the following industry”.

Inputs

Any context or user input that would be useful for the LLM to generate useful output. For example, a question answering system, may include snippets of domain-specific documents. Because the context window is limited, there are various techniques to inject longer texts like documents, beyond naive “stuffing”. Inputs may also include examples, so the model better understands the task.

Outputs

The outputs are consumed by the application. It may simply be a response to a user’s message in the case of a chat app, or may be structured output like JSON. For example, a user-intent classifier may output the following:

[

{

"message": "I had a great day today!",

"sentiment": "positive"

},

{

"message": "The traffic was terrible this morning.",

"sentiment": "negative"

},

{

"message": "I'm so excited for my upcoming vacation!",

"sentiment": "positive"

},

{

"message": "My computer crashed and I lost all my work.",

"sentiment": "negative"

},

{

"message": "I received some unexpected good news today!",

"sentiment": "positive"

}

]The context window token limit corresponds to the sum of all these parts. These parts are mostly an abstraction, as the LLM is agnostic2 to the text is completes, though priming it certainly impacts results.

Challenges

So far, I’ve found context windows present the following challenges.

Prompt Engineering

Prompt quality is important in utilizing the limited context window to its fullest. Good prompts are ideally very concise, as to maximize the budget for user inputs and outputs. Simultaneously, good prompts are specific enough to guide the model to generate desirable output. This tension is why I think of context windows as a new kind of code golf; we want as few (key)strokes that are on target.

One easy question I ask myself when crafting prompts is whether I need structured output or not3, and plan accordingly; this saves a lot of downstream engineering time.

Context too long

An easy gotcha: when context is too long requests will fail. This is one major reason for more advanced techniques for injecting long context like documents. It is unlikely that a developer can pass in an entire real-world document like a PDF in a single request4.

Timeouts

If an application is not streaming token responses, a request might fail, even if the provided context is under the token limit. I’ve encountered responses that take several seconds to complete, especially with more advanced models like GPT-4.

Incomplete output

This occurs sometimes in ChatGPT where it stops generating a response unexpectedly. Choosing appropriate formats for structured output is crucial here. A “chatty” format like HTML expends a lot of the remaining token budget. This can be especially problematic if the output is structured and a complete response is required for proper processing (eg unmatched JSON curly brace).

Performance / confusion

In the case of chat apps, like ChatGPT and Google’s Bard the context window applies to the entire conversation. It is one reason LLMs may get “confused” in very long conversations, and developers employ different techniques to endow models with long-term memory. Memory is fascinating in its own right, as there are different techniques, so we’ll defer that to a future post.

Higher Costs

Many of the APIs, including OpenAI’s employ usage-based billing by the token. This means that using the entire context window may not be wise, especially for more advanced models. For large-scale applications ensuring API calls fit the cost structure is important.

In the next part, I’ll walk through a practical example: a simple scraper that leverages LLMs, and works within the constraints of context windows.

A token is a sequence of characters that represent a single unit of meaning in a piece of text. Per OpenAI, “as a rough rule of thumb, 1 token is approximately 4 characters or 0.75 words for English text.”

OpenAI has indicated GPT-4 adheres more closely to its system prompt though.

It’s not an all-or-nothing affair either. You can request both natural language and structured output.

This is ever-changing; GPT-4’s context window is huge at ~32k tokens.