o1: The Missing Link in AI Agency?

How OpenAI's Latest Models Could Usher in the Era of Autonomous AI Assistants

Last week, I discussed my initial impressions of OpenAI's new model family: o1. I deliberately used a toy example to put o1 through its paces, knowing full well that it might not be satisfactory for all use cases. This week, I'm diving into what I believe this enables: agents.

OpenAI's o1 and o1-mini models represent a potential paradigm shift in AI capabilities, bridging the gap between reactive language models and truly agentic AI systems. By providing superior reasoning and planning abilities, these models could catalyze the development of autonomous AI assistants capable of handling complex, multi-step tasks.

Quick Update: Improved Usage Limits

First, a quick update: the usage limits on o1 and o1-mini have been substantially improved for paying users. This is a great benefit as everyone learns to leverage these models.

The increased limit for o1-mini is particularly impressive, essentially enabling usage that's on par with normal models.

Speaking of o1-mini, I suspect it will be a sleeper hit. It's substantially cheaper but performs similarly to full-blown o1.

However, it's worth noting that o1-mini has a specific focus:

Due to its specialization on STEM reasoning capabilities, o1-mini’s factual knowledge on non-STEM topics such as dates, biographies, and trivia is comparable to small LLMs such as GPT-4o mini.

It remains to be seen how limiting this specialization truly is, but I'm optimistic that RAG can help fill in any knowledge gaps.
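To make the RAG idea concrete, here's a minimal sketch of how retrieved facts could be placed in front of a reasoning model. Everything in it is an assumption for illustration: the `Document` type, the `retrieve` and `build_prompt` helpers, and the three-entry corpus are hypothetical, and plain word overlap stands in for the vector search a real pipeline would more likely use.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    return {word.strip(".,;:!?\"'()").lower() for word in text.split()} - {""}


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Rank documents by word overlap with the query (a crude stand-in for vector search)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc.text)), doc) for doc in corpus]
    # Keep only documents that share at least one word, highest overlap first.
    ranked = sorted((pair for pair in scored if pair[0] > 0),
                    key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in ranked[:k]]


def build_prompt(query: str, context: list[Document]) -> str:
    """Prepend retrieved facts so the model does not rely on its own recall."""
    facts = "\n".join(f"- {doc.text}" for doc in context)
    return f"Use only these facts:\n{facts}\n\nQuestion: {query}"


# Hypothetical non-STEM knowledge base.
corpus = [
    Document("kahneman", "Thinking, Fast and Slow by Daniel Kahneman was published in 2011."),
    Document("lovelace", "Ada Lovelace was born in 1815."),
    Document("react", "The ReAct paper appeared on arXiv in October 2022."),
]

query = "When was Thinking, Fast and Slow published?"
hits = retrieve(query, corpus, k=1)
prompt = build_prompt(query, hits)  # this string would be sent to the model
```

The overlap score has no stop-word handling, so common words like "was" can pull in weak matches once `k` grows. That's one reason production systems tend to reach for embeddings instead.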

Defining AI Agents: More Than Just Chatbots

People have been discussing "AI agents" for well over a year now, and I would argue that their promise has largely been unfulfilled, except in very constrained use cases like customer support chatbots. To further complicate matters, there are numerous definitions of what constitutes an "agent".

Here's my attempt to define it as simply as possible:

An AI agent is software that can perform tasks or make decisions on behalf of a user, similar to how a person might delegate responsibilities to a trusted representative.

Examples of human agents include sales representatives, travel booking agents, and customer support representatives. AI agents can be thought of similarly: software designed to perform tasks typically associated with specific job roles. A key question to consider is:

Can this AI software effectively carry out the responsibilities of an existing professional role?

There are differing opinions on what constitutes an AI agent, but I like this definition for how parsimonious, predictive, and explanatory it is. It's straightforward to identify and evaluate an agent when it's getting the same work done as a person. I also think this is one of the holy grails of AI: having technology do impactful work for people.

One small wrinkle: I believe "impactful work" can happen entirely in the digital world and doesn't have to translate to the physical world. However, I think this expectation will change as robotics improves.

Prior Art and Current Developments: The o1 Advantage

With definitions out of the way, let's dive into how o1 might differ from existing LLMs. People have, in fact, been attempting to design agentic systems with LLMs for some time. Papers like ReAct proposed designs as early as October 2022. And as I mentioned previously, prompting techniques like chain of thought (CoT) have been widely adopted in AI-native applications for some time as well. What's different about o1 is that the "thinking" is performed natively within the model. This is somewhat analogous to multimodality in models: instead of needing text to be fed into them, some models can natively support audio and image input and output.

o1 learns "productive" CoT through reinforcement learning:

Similar to how a human may think for a long time before responding to a difficult question, o1 uses a chain of thought when attempting to solve a problem. Through reinforcement learning, o1 learns to hone its chain of thought and refine the strategies it uses. It learns to recognize and correct its mistakes. It learns to break down tricky steps into simpler ones.

As far as I can see, having CoT natively confers two advantages for applications: performance and latency. I suspect that OpenAI's massive user base through ChatGPT helped it compile useful data for its CoT reinforcement learning. This likely translates to better out-of-the-box performance than most organizations could achieve on their own, simply because OpenAI has a data advantage.

Because CoT happens at the model layer, an application may be able to skip some retrieval steps that inject additional context, along with their associated database lookups. That could reduce the number of round trips to OpenAI's API and, by extension, perceived latency. Note, however, that latency across the o1 family is not consistent: different requests can take noticeably different amounts of time.
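The round-trip point is easy to see with a toy counter. The sketch below is an assumption-laden illustration, not a measurement: `CountingClient` is a hypothetical stand-in that never touches a network, and the three-call chain is just one way an application-layer CoT pipeline might be wired.

```python
class CountingClient:
    """Hypothetical stand-in for a model API client; it only counts requests."""

    def __init__(self) -> None:
        self.round_trips = 0

    def complete(self, prompt: str) -> str:
        self.round_trips += 1
        return f"<response to: {prompt[:40]}>"


def app_layer_cot(client: CountingClient, question: str) -> str:
    """Chain of thought driven by the application: one request per stage."""
    plan = client.complete(f"List the steps needed to answer: {question}")
    reasoning = client.complete(f"Work through these steps one by one:\n{plan}")
    return client.complete(f"Given this reasoning, state the final answer:\n{reasoning}")


def native_reasoning(client: CountingClient, question: str) -> str:
    """Reasoning happens inside the model, so the application sends one request."""
    return client.complete(question)


chained, native = CountingClient(), CountingClient()
app_layer_cot(chained, "How many weekdays are in March 2025?")
native_reasoning(native, "How many weekdays are in March 2025?")
print(chained.round_trips, native.round_trips)
```

Fewer round trips doesn't guarantee a faster answer, since the single native call may itself spend a variable amount of time reasoning.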

These advantages notwithstanding, I intuitively believe most applications would still benefit from domain-specific CoT. The reason is straightforward: a lot of the o1 model family's CoT learning has been centered around specific technical domains. OpenAI emphasized benchmarks and reasoning ability for STEM. However, I'm excited for more research into broader reasoning ability. It's possible these models would benefit from transfer learning; having strong analytical skills in a handful of technical domains may translate to reasoning competency in other domains. This is similar to humans: your friend who's a talented musician may also be a proficient programmer.

From Language Models to Autonomous Agents

While LLMs have shown impressive capabilities, they often fall short when it comes to complex reasoning and planning. This is where OpenAI's o1 models could be truly transformative. To understand why, let's dive into a useful mental model: system 1 vs system 2 thinking.

System 1 vs System 2: A Tale of Two Thinkers

You might be familiar with the concept of system 1 and system 2 thinking, popularized by Daniel Kahneman in his book "Thinking, Fast and Slow." If not, here's a quick primer:

Think of System 1 as your brain's autopilot. It's quick, intuitive, and doesn't require much effort. It's what you use when you're driving a familiar route or recognizing a friend's face. It's all about snap judgments and gut feelings.

System 2, on the other hand, is more like your brain's analytical powerhouse. It kicks in when you need to focus, solve complex problems, or make careful decisions. It's slower but more deliberate and logical. This is what you're using when you're doing your taxes or learning a new skill.

The cool thing is, we use both systems all the time, often without even realizing it. They work together to help us navigate the world. Sometimes System 1 can lead us astray with biases or rushed judgments, and that's when it's good to consciously engage System 2 to double-check our thinking.

LLMs: The System 1 Thinkers of AI

Traditional LLMs, in their raw form, excel at System 1-like thinking. They're great at rapid-fire responses, pattern recognition, and generating human-like text. This is why they're so good at tasks like casual conversation, creative writing, or quick information retrieval.

However, when it comes to tasks requiring deep analysis, step-by-step reasoning, or complex problem-solving, these models often fall short. We've been engineering workarounds, like chain-of-thought prompting, to coax more System 2-like thinking out of them. But it's been an uphill battle.

Enter o1: The System 2 Powerhouse

This is where o1 could be a game-changer. Because chain-of-thought reasoning is trained directly into the model rather than bolted on through prompting, o1 is poised to become a powerful System 2 thinker right out of the box. It's not just spitting out pre-learned patterns; it's actively reasoning through problems.

Think about the best colleagues you've worked with. They weren't just good at executing specific tasks, right? The truly exceptional ones excelled at planning, prioritizing, and exercising good judgment. They didn't just have knowledge; they knew how to apply it effectively.

That's what o1 brings to the table. It's like having a highly effective manager on your AI team. It can break down complex problems, plan out approaches, and guide other AI components to execute those plans.

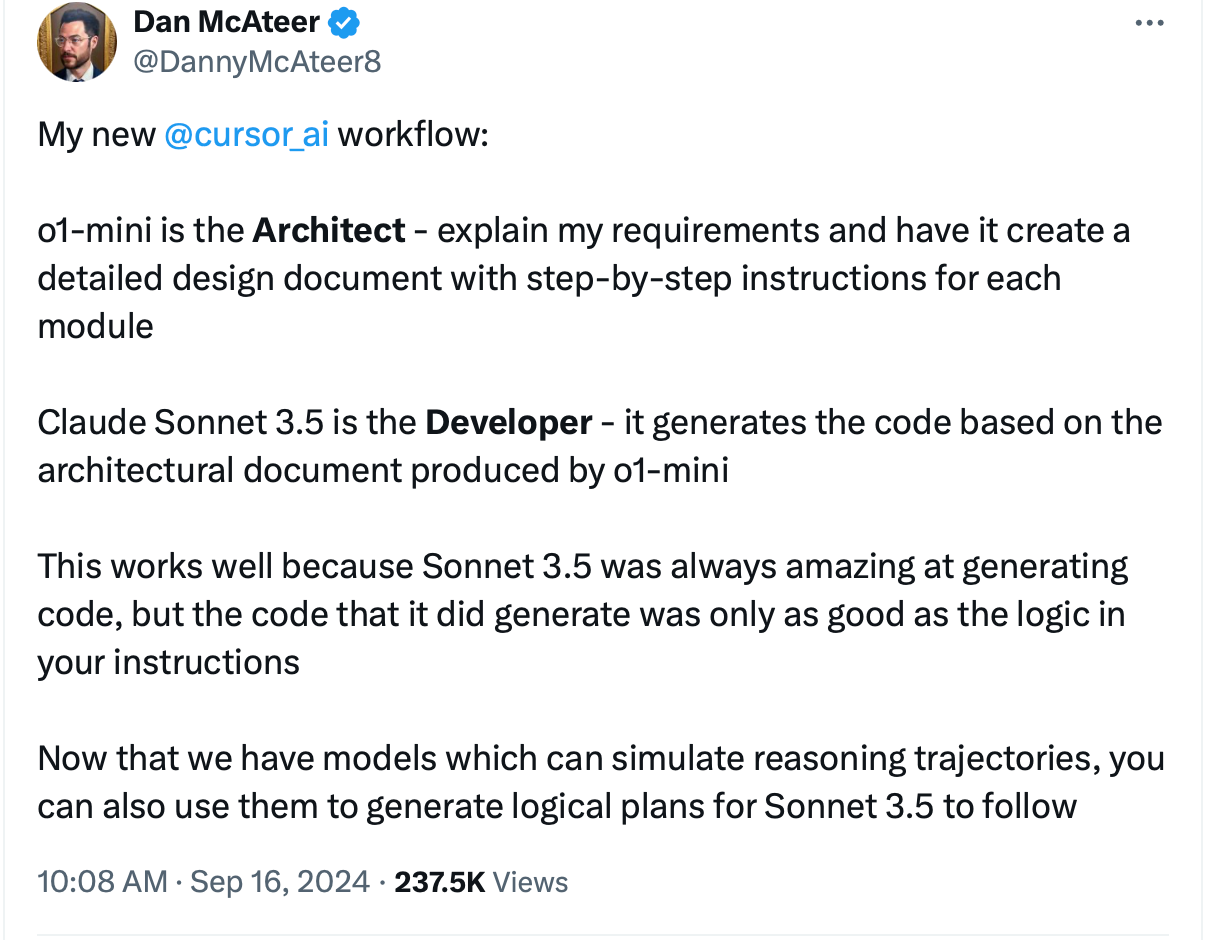

A Symphony of Models

Here's where things get really interesting. I don't think o1 is going to replace other models entirely. Instead, I see a future where we leverage multiple specialized models in concert:

o1 as the "reasoning engine" and planner

GPT-4o or even 4o-mini as the "front office" for conversation and user interaction

Specialized models for specific tasks (e.g., code generation, data analysis, document processing)

Dedicated RAG pipelines for injecting domain-specific knowledge

Imagine o1 as the conductor, orchestrating this AI symphony to tackle complex, multi-step tasks that were previously out of reach for autonomous systems.
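Here's a rough sketch of what that conductor pattern could look like in code. The role names, the `planner` function, and the `HANDLERS` registry are all hypothetical. Each handler is a plain function standing in for a real model or pipeline call, so treat this as a shape for the idea rather than an implementation.

```python
from typing import Callable, Dict, List, Tuple

Handler = Callable[[str], str]


def planner(goal: str) -> List[Tuple[str, str]]:
    """Stand-in for the reasoning model: returns (role, instruction) steps."""
    return [
        ("retrieval", f"find background for: {goal}"),
        ("specialist", f"draft a solution for: {goal}"),
        ("front_office", "summarize the result for the user"),
    ]


# Hypothetical registry mapping each role to the component that plays it.
HANDLERS: Dict[str, Handler] = {
    "retrieval": lambda instruction: f"[retrieval] {instruction}",
    "specialist": lambda instruction: f"[specialist] {instruction}",
    "front_office": lambda instruction: f"[front_office] {instruction}",
}


def orchestrate(goal: str) -> List[str]:
    """Send each planned step to the handler registered for its role."""
    outputs = []
    for role, instruction in planner(goal):
        handler = HANDLERS.get(role)
        if handler is None:
            raise KeyError(f"no handler registered for role {role!r}")
        outputs.append(handler(instruction))
    return outputs


for line in orchestrate("migrate the billing service"):
    print(line)
```

Keeping the registry separate from the planner means a cheaper or more specialized model can be swapped into any role without touching the planning logic.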

The Path to True AI Agents

This multi-model approach, with o1 at its core, could be the missing link in creating truly autonomous AI agents. We're talking about systems that can:

Understand a user's high-level goals

Break those goals down into actionable steps

Determine which specialized tools or models to use for each step

Execute the plan, adjusting course as needed

Deliver results in a user-friendly manner
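The five capabilities above can be sketched as a small loop. This is a minimal sketch under stated assumptions: the `plan` function is a hard-coded placeholder for a reasoning model, the tools are hypothetical local functions, and "adjusting course" is reduced to falling back to an alternate tool when one times out.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    tool: str
    argument: str


def plan(goal: str) -> List[Step]:
    """Hypothetical planner: break the goal into steps and pick a tool for each."""
    return [Step("search", goal), Step("summarize", goal)]


def search(argument: str) -> str:
    raise TimeoutError("search backend unavailable")  # simulated failure


def cached_search(argument: str) -> str:
    return f"cached notes on {argument}"


def summarize(argument: str) -> str:
    return f"summary of {argument}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "search": search,
    "cached_search": cached_search,
    "summarize": summarize,
}
FALLBACKS: Dict[str, str] = {"search": "cached_search"}  # assumed recovery policy


def run_agent(goal: str) -> str:
    """Execute the plan, fall back when a tool times out, and format the results."""
    results = []
    for step in plan(goal):
        try:
            result = TOOLS[step.tool](step.argument)
        except TimeoutError:
            fallback = FALLBACKS.get(step.tool)
            if fallback is None:
                raise  # no recovery path, so surface the failure
            result = TOOLS[fallback](step.argument)
        results.append(result)
    # Deliver a numbered, user-friendly report.
    return "\n".join(f"{i}. {r}" for i, r in enumerate(results, start=1))


print(run_agent("book a trip"))
```

A fuller agent would likely hand the failure back to the planner and ask for a revised plan. The static fallback table just keeps the example short.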

It's not hard to see how this could revolutionize fields like personal assistants, customer service, or even software development. The AI agent of the future might use o1 to plan out a complex task, GPT-4o to handle user communication, and a variety of specialized models to execute individual steps.

Of course, we're still in the early days. There will be challenges to overcome, from model integration to handling edge cases. But o1's ability to bring robust, built-in reasoning capabilities to the table is a significant step forward on the path to truly autonomous AI agents.